- LiDAR annotation for AI transforms unstructured, raw three-dimensional point cloud data generated by LiDAR sensors into organized, labeled 3D datasets.

- Methods applied to this task include 3D bounding box annotation, semantic segmentation, and temporal tracking for spatial reasoning.

- Professional data annotation services offer customized solutions that support complex projects with domain-specific knowledge and quality control workflows.

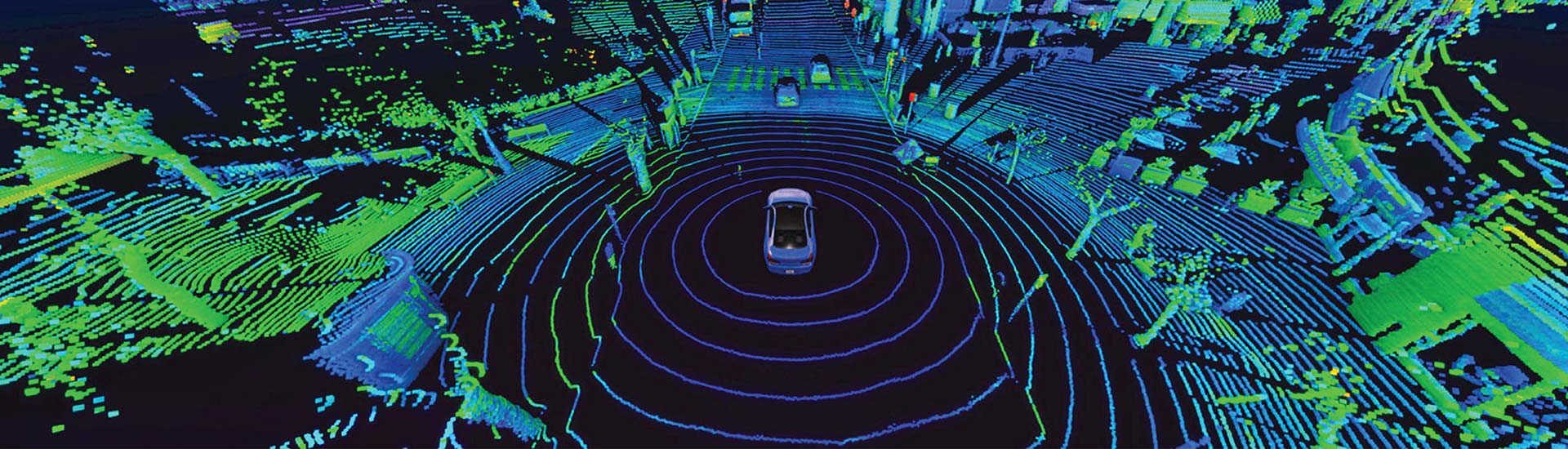

Current artificial intelligence systems often struggle to process the large volumes of raw spatial data that their sensors capture from three-dimensional environments. A single LiDAR sensor generates millions of spatial points. However, this raw data cannot serve as training data for machine learning algorithms until it is properly organized and labeled.

The global LiDAR market was estimated at approximately $3.01 billion in 2025 and is projected to grow to over $9.68 billion by 2032.

LiDAR annotation for AI converts unorganized, unlabeled point cloud data from sensors into organized, labeled LiDAR datasets. These datasets let perception systems do their work: self-driving cars determine how to drive safely, robots create accurate maps of physical spaces, and cities accurately measure and maintain their infrastructure.

What is LiDAR annotation?

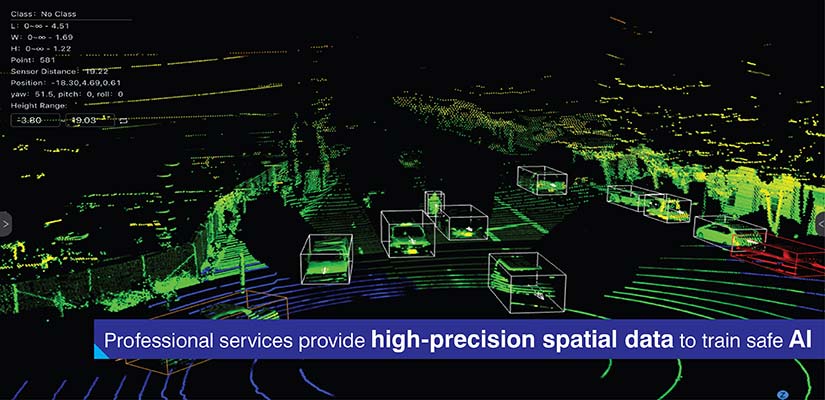

LiDAR data annotation involves methodically assigning labels to three-dimensional point cloud data so that it can be used with machine learning models. Unlike 2D image annotation, which takes place in flat pixel space, 3D LiDAR annotation labels volumetric data in which each point has its own x, y, z coordinates.

The added dimensionality increases the complexity of visualizing the data, making it harder to understand the relationships between different parts of the point cloud.

Point cloud data is often stored in specialized formats (e.g., PCD, LAS and Velodyne binary). Professional LiDAR annotation tools must support these formats and let annotators rotate, zoom and view the scene from multiple angles so that 3D coordinates can be annotated accurately.
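As a minimal sketch of what loading one of these formats involves, the snippet below reads a Velodyne-style binary file. It assumes the layout used by some public driving datasets, where each point is four consecutive float32 values (x, y, z, intensity). That layout, the file name and the function name `load_velodyne_bin` are assumptions for illustration, and other sensor exports may differ.

```python
import os
import tempfile

import numpy as np


def load_velodyne_bin(path):
    """Read a flat float32 file into an (N, 4) array: x, y, z, intensity.

    Assumes four float32 values per point; this is an assumed layout,
    not a universal standard.
    """
    raw = np.fromfile(path, dtype=np.float32)
    if raw.size % 4 != 0:
        raise ValueError("file does not contain a whole number of 4-value points")
    return raw.reshape(-1, 4)


# Round-trip demo with two made-up points written to a temporary file.
demo = np.array(
    [[1.0, 2.0, 0.5, 0.3],
     [10.0, -4.0, 1.2, 0.8]],
    dtype=np.float32,
)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "frame_000000.bin")  # hypothetical file name
    demo.tofile(path)
    cloud = load_velodyne_bin(path)

xyz = cloud[:, :3]        # coordinates an annotation tool would render
intensity = cloud[:, 3]   # per-point return intensity
print(cloud.shape)
```

Formats such as PCD and LAS carry headers and extra fields, so in practice they are usually read with a dedicated library rather than a raw array load.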

A large economic engine drives the demand for data annotation tools: the global data annotation tool market is expected to reach $1.69B in 2025 and is projected to grow to $14.26B by 2034.

The annotation process draws on several distinct methods:

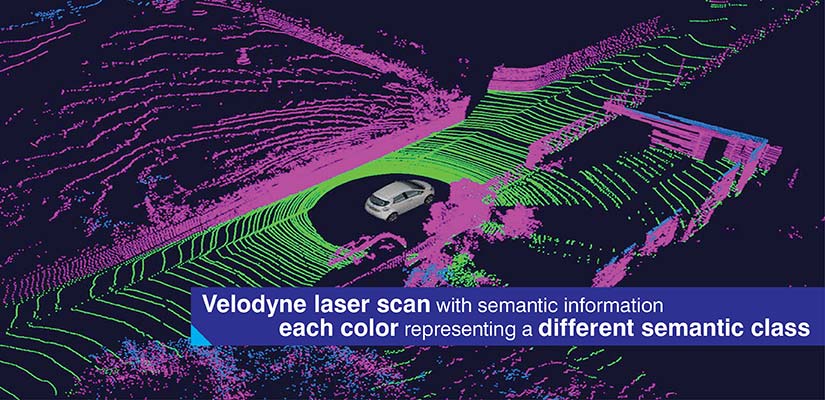

- 3D bounding boxes: These are cuboid shapes drawn around objects such as vehicles, pedestrians and infrastructure.

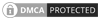

- Semantic segmentation: Individual points are labeled by their semantic class (e.g., road surface, vegetation, buildings, vehicles).

- Instance segmentation: Each object within a class is labeled as a separate instance so that it can be tracked individually.

- Polylines & lane markings: These include linear features that enable autonomous navigation like road boundaries and lane divisions.

- Object tracking over time: Objects are tracked through sequential LiDAR frames to identify patterns of movement. Current benchmarks for such systems, for example an enhanced DeepSORT algorithm, report a Multiple Object Tracking Accuracy (MOTA) of about 66% when using fused data.

LiDAR training data preparation requires specialized knowledge beyond basic image labeling, as it involves annotating 3D point clouds and spatial data.
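To make the tracking metric mentioned above concrete, here is a small sketch of the standard MOTA formula: one minus the sum of missed objects, false positives and identity switches, divided by the number of ground-truth objects. The counts in the example are made-up values chosen to land near 66%. They are not taken from any benchmark.

```python
def mota(false_negatives, false_positives, id_switches, num_gt_objects):
    """Multiple Object Tracking Accuracy over a whole sequence.

    All four arguments are totals summed across every frame.
    """
    if num_gt_objects <= 0:
        raise ValueError("num_gt_objects must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt_objects


# Hypothetical totals for illustration only.
score = mota(false_negatives=200, false_positives=100,
             id_switches=40, num_gt_objects=1000)
print(f"MOTA = {score:.2f}")
```

Because identity switches count as errors, consistent object IDs in the annotated ground truth directly affect how a tracker is scored.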

Why LiDAR annotation matters for AI applications

Annotated LiDAR point clouds are valuable for various use cases:

| Application Domain | Key Capabilities | Critical Functions |
|---|---|---|
| Autonomous Vehicles | Object detection, free space estimation, dynamic obstacle tracking | Identify vehicles, cyclists, pedestrians with spatial precision; distinguish drivable surfaces; predict trajectories |
| Smart Cities | Infrastructure mapping, pedestrian flow analysis, traffic monitoring | Catalog poles, signals, signage; understand movement patterns; measure vehicle counts without privacy concerns |
| Robotics | Navigation, obstacle avoidance, SLAM validation | Distinguish traversable terrain; identify dynamic obstacles; validate spatial reconstructions |

LiDAR labeling for computer vision adds spatial resolution and three-dimensional volume to the data captured by cameras alone. Cameras collect very detailed visual information but do not measure distances directly. This inability to determine scale creates ambiguity in featureless environments, such as vast open spaces or flat surfaces like parking lots.

The high ranging accuracy of LiDAR (typically centimeter-level for automotive sensors) provides several unique benefits:

- Accurate distance measurements: Direct measurement of range reduces the depth estimation error of single-camera vision systems. Studies show that the combination of LiDAR and cameras has reduced rear-end collision rates by 50 percent compared with human drivers.

- Volumetric object representation: Three-dimensional bounding boxes represent the true spatial extent and dimensions of objects.

- Lighting-independent detection: Performance is largely unaffected by changing light levels, shadows or surface texture.

LiDAR annotation services give AI systems greater knowledge of their environment and allow them to make better decisions. Annotated LiDAR datasets provide ground-truth spatial relationships among objects, surfaces and infrastructure elements.

Perception models trained using precise labels of point clouds perform well in scenarios where visual systems fail. Research indicates that sensor fusion can improve pedestrian detection by 29.2 percent in complex scenes.

This enables better autonomous navigation, more accurate spatial analysis and more robust system performance under real world operating conditions.

Key use cases of LiDAR annotation in AI and ML

Annotated LiDAR data is used to build different types of datasets that serve industry-specific operations requiring highly accurate spatial information.

Autonomous vehicle training

Autonomous systems combine camera images, radar returns and LiDAR point clouds into a single representation of their environment. For multi-sensor fusion, all data types must be spatially and temporally aligned so that annotations carry across them. LiDAR typically anchors the ground truth because it provides precise spatial measurements.

Detecting occluded objects illustrates LiDAR’s strength. Where vehicles partly block a cyclist from a camera’s view, LiDAR can still capture the three-dimensional profile of the cyclist’s visible parts.

The training datasets contain extensive annotations of moving vehicles, with bounding boxes precisely defined to show the orientation, dimensions and location of each vehicle in every frame.
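The alignment step can be sketched as projecting LiDAR points into a camera image with a pinhole model. This is a minimal illustration: the extrinsic matrix `T_cam_from_lidar`, the intrinsic matrix `K` and all numeric values are hypothetical, and real calibrations also account for lens distortion and timing offsets.

```python
import numpy as np


def project_lidar_to_image(points_lidar, T_cam_from_lidar, K):
    """Project (N, 3) LiDAR points to pixel coordinates.

    T_cam_from_lidar: 4x4 rigid transform from LiDAR frame to camera frame.
    K: 3x3 pinhole intrinsics. Points behind the camera get NaN pixels.
    """
    points_lidar = np.asarray(points_lidar, dtype=float)
    n = points_lidar.shape[0]
    homogeneous = np.hstack([points_lidar, np.ones((n, 1))])
    points_cam = (T_cam_from_lidar @ homogeneous.T).T[:, :3]

    in_front = points_cam[:, 2] > 0          # camera looks along +z
    uvw = (K @ points_cam.T).T
    uv = np.full((n, 2), np.nan)
    uv[in_front] = uvw[in_front, :2] / uvw[in_front, 2:3]
    return uv, in_front


# Hypothetical calibration: LiDAR and camera frames coincide (identity),
# focal length 1000 px, principal point at (640, 360).
T_cam_from_lidar = np.eye(4)
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
points = np.array([[0.0, 0.0, 10.0],    # straight ahead
                   [1.0, 0.0, 10.0],    # 1 m to the side
                   [0.0, 0.0, -5.0]])   # behind the camera
uv, in_front = project_lidar_to_image(points, T_cam_from_lidar, K)
print(uv)
```

With a projection like this, a label placed on a 3D point can be checked against the pixel it lands on, which is one way annotations are kept consistent across sensors.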
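One common way to represent such a box is a center, a size and a heading angle. The sketch below assumes that representation (the `Cuboid` class and its field names are illustrative, not a specific tool's schema) and shows how to find which points fall inside a labeled box.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Cuboid:
    center: tuple      # (x, y, z) of the box center, in meters
    size: tuple        # (length, width, height), in meters
    yaw: float         # heading about the vertical axis, in radians
    label: str
    track_id: int


def points_in_cuboid(points, box):
    """Return a boolean mask of the (N, 3) points lying inside the box."""
    local = np.asarray(points, dtype=float)[:, :3] - np.asarray(box.center)
    # Rotate by -yaw so the box axes line up with the coordinate axes.
    c, s = np.cos(-box.yaw), np.sin(-box.yaw)
    x = c * local[:, 0] - s * local[:, 1]
    y = s * local[:, 0] + c * local[:, 1]
    z = local[:, 2]
    half = np.asarray(box.size) / 2.0
    return (np.abs(x) <= half[0]) & (np.abs(y) <= half[1]) & (np.abs(z) <= half[2])


# Hypothetical car: 4 m long, 2 m wide, 2 m tall, heading along +y.
car = Cuboid(center=(10.0, 0.0, 1.0), size=(4.0, 2.0, 2.0),
             yaw=np.pi / 2, label="car", track_id=7)
pts = np.array([[10.0, 1.5, 1.0],    # along the car's length: inside
                [11.5, 0.0, 1.0],    # beyond the car's width: outside
                [10.0, 0.0, 3.0]])   # above the roof: outside
mask = points_in_cuboid(pts, car)
print(mask)
```

Counting the points inside a box is a simple sanity check: a box that encloses very few points at close range may be misplaced or mis-sized.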

Lane and road mapping

High-definition maps rely on the precise annotation of road geometry. Lane boundaries receive polyline annotations that trace both the visible lane markings and their implied continuation through intersections.

Crosswalks, medians and merge zones are annotated with specific labels that represent the semantic meaning and spatial extent of these features. These annotations feed route planning algorithms, which use them to determine the allowable paths along lanes and across lane connections.

Semantic segmentation in point clouds

The ability to make environmental distinctions at a point level using semantic segmentation enables a far more detailed understanding of a scene than simple object detection. Individual elements such as roads, sidewalks, vegetation, poles, and building façade components can be uniquely labeled while preserving their precise spatial relationships. Architectures like PointNet and KPConv learn directly from labeled 3D coordinates, allowing the resulting models to segment new point clouds with the same fine-grained detail.
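Point-level labels are usually stored as one class index per point, which also makes it straightforward to score a model against the annotation. The sketch below computes per-class intersection over union (IoU) on a toy example. The class list and the label arrays are made up for illustration.

```python
import numpy as np

# Hypothetical label map: index in this list = class id stored per point.
CLASSES = ["road", "sidewalk", "vegetation", "pole", "building"]


def per_class_iou(predicted, ground_truth, num_classes):
    """IoU for each class; NaN where a class appears in neither array."""
    predicted = np.asarray(predicted)
    ground_truth = np.asarray(ground_truth)
    ious = np.full(num_classes, np.nan)
    for c in range(num_classes):
        intersection = np.sum((predicted == c) & (ground_truth == c))
        union = np.sum((predicted == c) | (ground_truth == c))
        if union > 0:
            ious[c] = intersection / union
    return ious


# Eight toy points: annotated labels versus a model's predictions.
gt_labels = np.array([0, 0, 0, 1, 1, 2, 2, 3])
pred_labels = np.array([0, 0, 1, 1, 1, 2, 3, 3])
ious = per_class_iou(pred_labels, gt_labels, len(CLASSES))
mean_iou = np.nanmean(ious)   # ignores classes absent from both arrays
for name, value in zip(CLASSES, ious):
    print(f"{name}: {value:.3f}")
print(f"mean IoU: {mean_iou:.3f}")
```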

Robotics and drones

Indoor navigation systems for warehouse robots depend on annotated datasets of the environment in which they operate. Market surveys indicate that the robotics and automation industry now accounts for 42% of the market for single-line LiDAR sensors.

Labels are needed for storage racks, pallets, doorways and dynamic obstacles to accurately model the space in which they exist. Annotated aerial LiDAR datasets are used for outdoor drone applications such as inspecting power lines and mapping terrain.

SLAM validation uses annotated datasets as ground truth against which a localization estimate is compared. Researchers test the performance of SLAM algorithms on labeled sequences in which the positions and trajectories of objects are known.
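A typical comparison of this kind is the absolute trajectory error, reported as a root-mean-square distance between estimated and ground-truth positions. The sketch below assumes both trajectories are already expressed in the same coordinate frame with matching timestamps. Full evaluations usually align the trajectories first, and the sample values here are invented.

```python
import numpy as np


def ate_rmse(estimated_xyz, ground_truth_xyz):
    """RMSE of per-pose position error between two (N, 3) trajectories.

    Assumes the poses are already time-matched and in a common frame.
    """
    est = np.asarray(estimated_xyz, dtype=float)
    gt = np.asarray(ground_truth_xyz, dtype=float)
    if est.shape != gt.shape:
        raise ValueError("trajectories must have the same shape")
    errors = np.linalg.norm(est - gt, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))


# Toy trajectories: the estimate drifts sideways by 0.3 m, then 0.4 m.
ground_truth = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
estimate = [[0.0, 0.0, 0.0], [1.0, 0.3, 0.0], [2.0, 0.4, 0.0]]
rmse = ate_rmse(estimate, ground_truth)
print(f"ATE RMSE: {rmse:.3f} m")
```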

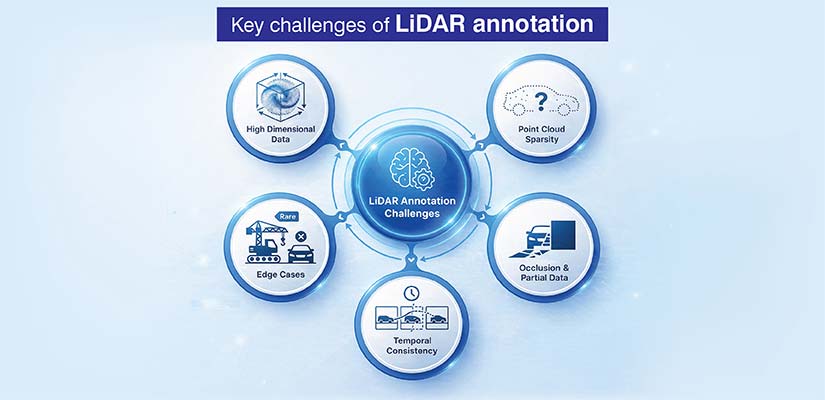

Challenges in LiDAR annotation and how to solve them

LiDAR-based use cases demand very high annotation quality, but inherent attributes of LiDAR data create barriers. These barriers can be addressed systematically.

Point cloud annotation has several inherent barriers:

- High-dimensional data: Dense point clouds from urban environments contain millions of points, making it difficult to navigate the scene while keeping track of the spatial relationships between points from different viewing angles.

  Solution: Specialized visualization tools and annotator training help develop spatial reasoning skills in three dimensions.

- Point cloud sparsity: At longer ranges, sensors often produce sparse point clouds, and object boundaries become ambiguous due to insufficient data. The annotator must then infer object boundaries from incomplete data.

  Solution: Annotators with experience interpreting sparse data and using contextual clues can make informed decisions.

- Occlusion and partial data: Occlusions occur when physical obstructions interrupt the laser beam, resulting in shadow areas where the object appears fragmented.

  Solution: Annotators with domain-specific knowledge can determine the full extent of an object's boundaries by examining its visible parts.

- Temporal consistency: Across sequential scans, annotators must keep labels and object identifiers consistent as objects move and rotate over time.

  Solution: Automated consistency checks flag discontinuities, and annotators manually review the flagged changes to ensure that objects are identified correctly across frames.

- Edge cases: Rare object categories, such as emergency vehicles and construction equipment, require special attention to label correctly.

  Solution: Statistical sampling verifies the label distribution, and senior annotators perform a detailed review of edge cases.
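The automated consistency check described for temporal consistency can be sketched as a pass over consecutive frames that flags tracks whose class changes or whose center moves implausibly far. The frame structure and the 3-meter-per-frame threshold below are assumptions for illustration. A real pipeline would tune the threshold to the sensor frame rate and object class.

```python
import math


def find_track_issues(frames, max_step_m=3.0):
    """Flag label changes and position jumps between consecutive frames.

    frames: list of dicts mapping track_id -> (label, (x, y, z)).
    Returns a list of (frame_index, track_id, issue_kind) tuples.
    """
    issues = []
    for i in range(1, len(frames)):
        previous, current = frames[i - 1], frames[i]
        for track_id, (label, center) in current.items():
            if track_id not in previous:
                continue  # newly appeared track, nothing to compare
            prev_label, prev_center = previous[track_id]
            if label != prev_label:
                issues.append((i, track_id, "label_change"))
            if math.dist(prev_center, center) > max_step_m:
                issues.append((i, track_id, "jump"))
    return issues


# Toy sequence: track 2 changes class, track 1 jumps 11 m in one frame.
frames = [
    {1: ("car", (0.0, 0.0, 0.0)), 2: ("pedestrian", (5.0, 5.0, 0.0))},
    {1: ("car", (1.0, 0.0, 0.0)), 2: ("cyclist", (5.5, 5.0, 0.0))},
    {1: ("car", (12.0, 0.0, 0.0)), 2: ("cyclist", (6.0, 5.0, 0.0))},
]
issues = find_track_issues(frames)
for frame_index, track_id, kind in issues:
    print(f"frame {frame_index}: track {track_id} flagged for {kind}")
```

Flagged items are candidates for manual review, not automatic corrections, which keeps the human judgment step in place.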

Quality assurance in LiDAR annotation services occurs at multiple levels of verification. Combining automation with human-in-the-loop review allows initial labels to be suggested automatically while preserving human judgment in refinement and quality verification.

How professional LiDAR annotation services add value

Annotators use customized workflows to meet the needs of each industry. For example, autonomous vehicle projects require annotators to focus on maintaining temporal consistency and dynamic tracking of objects.

Infrastructure mapping requires annotators to provide high semantic accuracy and cover all associated static features. Each industry has specific requirements. Professional services can modify their process and quality measures to address these differences.

Precise three-dimensional labeling of objects in moving scenes is an important benefit of LiDAR annotation. Accurate bounding box placement for multi-class detection and lane features mapped at sub-meter accuracy are also crucial.

Here’s how specialized LiDAR annotation service providers deliver value:

- Understanding the return intensity patterns, beam divergence characteristics and distance-dependent point density variations of different LiDAR sensors.

- Maintaining cross-frame object ID consistency through complete occlusion events and through transitions between sensor fields of view in sequential data.

- Normalizing ground planes by accounting for vehicle pitch, roll and changes in road surface to create a stable vertical reference frame.

- Disambiguating first, intermediate and last returns on semitransparent objects such as vegetation or chain-link fences.

- Creating precise transformation matrices to transform annotations between vehicle body frames, sensor frames and global coordinate systems.

Iterative feedback loops also let clients use their model performance metrics to refine the annotations, improving pipeline performance over successive rounds.
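The coordinate transformations in the last item can be sketched by chaining 4x4 homogeneous matrices. The mounting offset, vehicle pose and helper names below are hypothetical, and the example uses only a yaw rotation for brevity. Real calibrations include full 3D rotations.

```python
import numpy as np


def make_transform(yaw, translation):
    """Build a 4x4 rigid transform from a yaw angle and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def apply_transform(T, points):
    """Apply a 4x4 rigid transform to (N, 3) points."""
    pts = np.asarray(points, dtype=float)
    return pts @ T[:3, :3].T + T[:3, 3]


# Hypothetical setup: sensor mounted 1.5 m forward and 1.8 m up on the
# vehicle; vehicle at (100, 200, 0) in the global frame, heading +y.
T_vehicle_from_sensor = make_transform(0.0, [1.5, 0.0, 1.8])
T_global_from_vehicle = make_transform(np.pi / 2, [100.0, 200.0, 0.0])
T_global_from_sensor = T_global_from_vehicle @ T_vehicle_from_sensor

# A box center annotated in the sensor frame, moved to the global frame.
center_sensor = np.array([[10.0, 0.0, -1.0]])
center_global = apply_transform(T_global_from_sensor, center_sensor)

# The inverse matrix maps the annotation back to the sensor frame.
center_back = apply_transform(np.linalg.inv(T_global_from_sensor), center_global)
print(center_global)
```

Naming each matrix by its target and source frame, as in `T_global_from_sensor`, makes it easier to see that a chain of transforms is composed in the right order.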

Future of LiDAR annotation in AI development

Four-dimensional LiDAR adds a temporal dimension to 3D LiDAR by capturing velocity information for each point, so spatio-temporal annotations must contain both position and motion vector information. This requires new annotation primitives that treat motion as a primary property.

This market is growing rapidly due to 4D advancements, with an estimated compound annual growth rate (CAGR) of 15.7% over the next ten years.

Human annotators will be assisted by automated AI-based tools. Deep learning models produce the initial labels, which are then verified, corrected and refined by humans. Growing demand from areas such as defense, logistics and construction is expanding the number of use cases for LiDAR data annotation.

As machine learning evolves, annotation schemas will need continuous improvement to support transformer architectures and multitask learning.

Conclusion

LiDAR data annotation transforms unstructured point cloud data into structured data. This enables AI perception systems to understand their environment through structure and spatial relationships. Many applications, such as autonomous vehicles and robotics, rely on the ability of these perception systems to accurately interpret spatially precise data.

The challenges associated with high-dimensional data, occlusion and temporal consistency require expertise in both technical aspects and quality control to ensure the quality of the annotated data.

Accurate and precise annotated data is essential for an AI system’s ability to understand its environment and function reliably. Therefore, organizations should hire or partner with qualified annotators who have established, effective workflows and deliver quality annotations that match the organization’s expectations for model performance.
