- Data annotation is crucial for training AI models, ensuring labeled, accurate, and scalable datasets that directly affect performance and reliability.

- Effective strategies, such as defining clear objectives, prioritizing quality, optimizing workflows, and leveraging outsourcing, enhance the efficiency and scalability of annotation processes.

- Companies face challenges like managing large datasets and maintaining quality, but emerging trends like self-supervised learning and synthetic data are driving innovation in the field.

Table of Contents

- What Is Data Annotation?

- Why Data Annotation Matters in AI Projects

- Why Data Annotation is Critical for Training High-Performing AI Models

- Common Data Annotation Challenges in AI and ML Projects

- Top 5 Data Annotation Strategies to Improve Model Accuracy and Speed

- Emerging Trends in Data Annotation

- Conclusion

What Is Data Annotation?

Data annotation is the process of annotating or labeling text, images, audio, and video, to provide meaning to raw data, so the data is useful for artificial intelligence (AI) and machine learning (ML) systems to learn patterns and generate outcomes. These annotations become the source, also known to as “ground truth” which algorithms use during they are trained, tested, or become familiar to enhance their skills to better perform.

High-quality data annotation services ensure accuracy and consistency, which are essential for building reliable AI systems. If annotation is inaccurate, common behavior for a model is to misinterpret its input data with bad results, inefficient processes, leading to poor decisions. Conversely, if the data is well annotated, it may provide a good basis for learning, allow AI to present reliable outcomes, scalable decisions, and be delivered for business use.

Why Data Annotation Matters in AI Projects

Data annotation is essential to delivering most AI projects and especially those that utilize supervised machine learning. You can think of the concept of supervised machine learning as similar to teaching a child: you hold up an apple and say “apple”, and the child learns to associate the image of an apple with the word. The same is true for AI models; they have to be “shown” examples of data with the correct “answers” or “labels” for them to learn.

Here’s a breakdown of why data annotation matters so much in AI projects:

- The Fuel for Supervised Learning

- Ensuring Accuracy and Performance

- Establishing Ground Truth

- Enabling Pattern Recognition

- Handling Diverse Data Types

- Addressing Edge Cases and Nuances

- Mitigating Bias

- Driving Continuous Improvement

In essence, data annotation transforms raw, unstructured information into structured, machine-readable knowledge. Without this meticulous process, AI models would remain largely unguided, unable to learn effectively, and ultimately, incapable of delivering on their immense potential.

Why Data Annotation is Critical for Training High-Performing AI Models

Every tagged image, transcribed audio file, or annotated text becomes a building block, teaching AI to make decisions that matter. The difference between an average model and a high-performing one lies in the quality of the data annotation. In the hands of experts, data annotation transforms raw information into reliable, scalable and truly intelligent AI solutions.

Quality data annotation has a profound impact on AI models, as it directly influences their accuracy, reliability and performance.

Common Data Annotation Challenges in AI and ML Projects

Building effective AI models relies heavily on accurate and high-quality annotated data. However, the process of data annotation presents various challenges for AI and ML companies, demanding careful planning and execution.

- Managing large datasets: Handling massive datasets can be overwhelming, requiring efficient tools and workflows to organize, store, and process data effectively.

- Reducing bias in annotations: Bias in annotated data can lead to skewed AI models. Mitigating bias requires diverse annotation teams and stringent quality control measures.

- Allocating resources for scalability: As AI projects grow, scaling annotation efforts become crucial. This involves balancing cost, speed, and accuracy while managing resources effectively.

- Ensuring quality and consistency: Maintaining high quality and consistency across large datasets is essential for reliable AI models. This necessitates robust quality assurance processes and clear annotation guidelines.

Overcoming these data annotation challenges is paramount for the success of AI projects. By implementing effective data annotation strategies and leveraging the right tools, companies can streamline their annotation workflows and accelerate their AI initiatives.

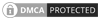

Top 5 Data Annotation Strategies to Improve Model Accuracy and Speed

Data annotation isn’t just a step in your AI project; it’s the foundation that determines success or failure. It’s not about working harder; it’s about working smarter. A well-defined data annotation strategy is the blueprint for success, highlighting the importance of data annotation in transforming chaos into clarity and inefficiency into precision.

Let’s explore the strategies that take your data from ordinary to extraordinary.

Strategy 1: Set Clear, Measurable Goals for Data Annotation

Clearly defining annotation objectives is the foundation of a successful data annotation project. When objectives are well structured and aligned with the AI project’s goals, the entire annotation process becomes more efficient and accurate.

What to Focus On:

Align Annotation Goals with AI Project Objectives

Ensure that the annotation goals are directly tied to the overarching objectives of your AI project. For example, if your AI model aims to classify images, prioritize labeling categories accurately.

Determine the Type of Annotations Required

Selecting the right annotation type is crucial for your project’s success. Here are the most common annotation types and when to use them:

- Semantic Segmentation: This involves pixel-level annotation to classify each pixel of an image into specific categories. It’s used in applications like autonomous vehicles (identifying roads, pedestrians and vehicles) and medical imaging (segmenting organs or anomalies). While highly detailed, semantic segmentation is time intensive and requires skilled annotators or advanced tools.

- Bounding Boxes: These rectangular boxes are used to identify and localize objects within an image. They are commonly employed in object detection tasks, such as identifying animals in wildlife imagery or detecting products on retail shelves. Bounding boxes are faster to annotate compared to segmentation, but may lack precision around object boundaries.

- Labeling: Labeling is the simplest form of annotation in which entire images or objects within them are tagged with categories or attributes. This is ideal for classification tasks, such as determining whether an image contains a cat or dog. It’s efficient and less resource-intensive, making it suitable for large datasets with simpler objectives.

Choose the type of annotation based on your AI model’s requirements and the complexity of your data. The more complex the annotation type, the higher the level of expertise and time required.

The Importance of Clear Annotation Objectives

- Saves Time, Resources and Prevents Rework: Clear objectives streamline workflow by reducing unnecessary iterations and resource wastage. A well-planned annotation strategy ensures that your efforts align perfectly with your AI model’s needs, avoiding the need for costly corrections later.

- Reduces Ambiguity During the Annotation Process: When the objectives and annotation types are well defined, annotators can work with clarity and precision, minimizing errors and discrepancies in the annotated dataset. This enhances the overall quality and reliability of the data.

Strategy 2: Use the Right Data Annotation Tools and Techniques

Selecting the appropriate tools and techniques for data annotation is essential to maximizing efficiency and ensure high-quality outcomes. The right combination of tools and techniques can accelerate workflows and accommodate project complexities.

Types of Data Annotation Tools

-

Automated Annotation Tools:

- Powered by AI to pre-label data, significantly reducing manual effort.

- Ideal for large datasets or repetitive tasks, such as auto-labeling images.

-

Manual Annotation Tools:

- Provide more control and precision for complex or nuanced annotations.

- Best suited for tasks requiring expert judgment or detailed analysis.

-

Popular Platforms:

- Labelbox: Offers collaborative workflows and robust quality control features.

- Amazon SageMaker Ground Truth: Combines automated labeling with human review for scalable annotation.

Key Data Annotation Techniques

- Semantic Annotation: Labels text or image data to extract meaning essential for NLP and image recognition.

- Polygon Annotation: Outlines irregular objects in images, commonly used in autonomous driving or object segmentation.

- Keypoint Annotation: Identifies specific points, such as facial landmarks or joint positions, for applications like facial recognition or pose estimation.

Using the right tools and data annotation techniques for AI allows you to streamline the annotation process, maintain high-quality standards, and meet the specific demands of your AI project efficiently.

Strategy 3: Prioritize Data Quality and Validation Processes

High-quality data annotation is the foundation for developing successful AI models. Implementing robust quality metrics and validation processes ensures that the annotated data is reliable, consistent, and actionable.

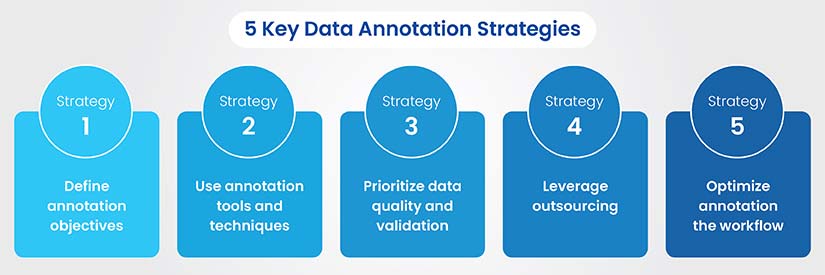

Key Quality Metrics

Key quality metrics for data annotation include accuracy, consistency, precision, recall, F1 score, inter-annotator agreement and error rate. These metrics provide a clear framework for evaluating and enhancing the reliability of annotated data, ensuring robust AI model performance.

- Precision: Measures the proportion of correctly labeled instances among all instances labeled as positive. It evaluates annotation specificity, reducing false positives and ensuring annotations are relevant to the intended task.

- Recall: Assesses the proportion of correctly labeled instances retrieved from all relevant instances. It focuses on reducing false negatives, ensuring critical data points are not missed during annotation.

- F1-Score: Combines precision and recall into a single metric, representing the harmonic mean. It balances specificity and completeness, providing a comprehensive measure of annotation quality.

- Accuracy: Calculates the proportion of correct annotations compared to the total dataset. It provides a straightforward measure of overall annotation performance, reflecting both correct and incorrect labels.

- Inter-Annotator Agreement (IAA): Evaluates consistency among annotators labeling the same dataset. It ensures reliability by measuring how closely annotators agree using metrics like Cohen’s Kappa or Fleiss’ Kappa.

Common Quality Control Measures

- Double Annotation: Assign the same task to multiple annotators, compare results, and resolve inconsistencies to achieve higher accuracy.

- Expert Review: Engage domain specialists to validate annotations, especially for complex or high-stakes projects.

- Quality Sampling: Regularly audit a subset of annotated data to identify and correct systemic errors.

Impact of Quality Data Annotation on AI Models

- Improves Model Performance: Accurate annotations enhance model predictions and minimize errors during deployment.

- Reduces Bias and Errors: High-quality data eliminates noise and incorrect patterns, leading to fair and robust models.

Prioritizing data quality through rigorous validation processes ensures your AI models are trained on reliable datasets, paving the way for accurate predictions and long-term success.

Strategy 4: Leverage Outsourcing

Outsourcing data annotation services can be a game changer for projects requiring scalability, cost-efficiency, and expertise. By partnering with specialized vendors, businesses can focus on core activities while ensuring high-quality data annotation.

Why Outsource Data Annotation Services?

Access to Skilled Annotators:

- Outsourcing grants access to a pool of professionals adept at applying a variety of annotation methods, including text tagging, image labeling, semantic segmentation and keypoint annotation.

- These experts often specialize in domain-specific requirements, ensuring high-quality outputs tailored to industries such as healthcare, automotive, retail and more.

Cost Advantages:

- Reduces expenses associated with hiring, training and maintaining an in-house team.

- Vendors often operate in regions with lower labor costs, translating to financial savings.

Scalability:

- External providers can quickly scale resources to match project requirements, accommodating surges in data volume.

By leveraging outsourcing, businesses can streamline their data annotation workflows, reduce costs, and achieve high-quality results without diverting in-house resources from critical tasks.

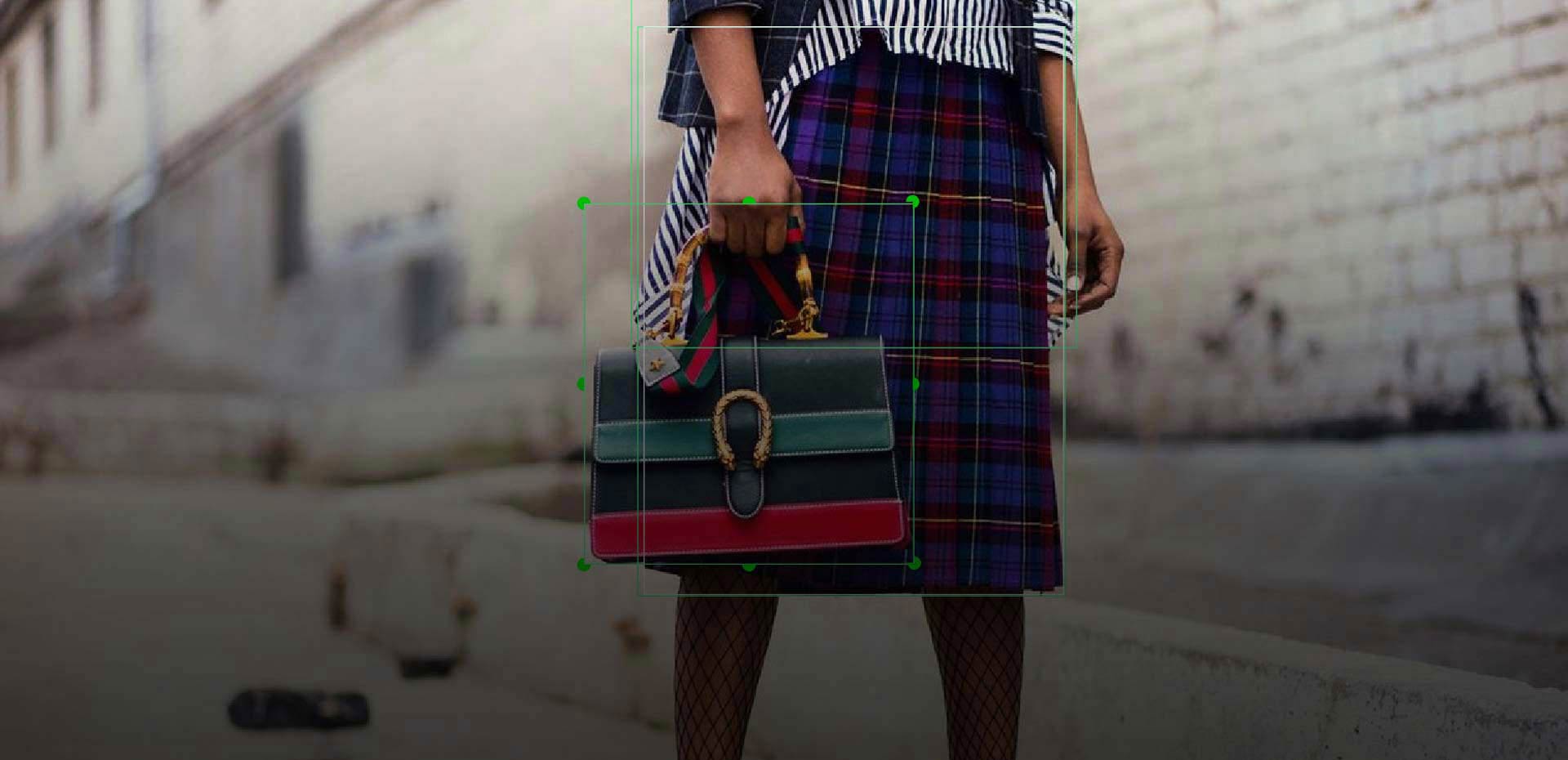

1.2M+ accurately annotated fashion images fuel high performing AI/ML model for Californian technology company

A California-based retail AI firm needed to rapidly annotate 1.2 million images for their AI/ML models. HitechDigital delivered high-quality annotations within 12 days, achieving a 96% increase in annotation productivity. This enabled the client to train more accurate models for inventory management, product recommendations and enhanced customer experiences.

Read full Case Study »Strategy 5: Optimize the Annotation Workflow

An optimized annotation workflow ensures efficiency, reduces bottlenecks, and delivers consistent, high-quality data. Streamlining each phase of the annotation process can significantly enhance productivity and accuracy.

Steps for Workflow Optimization

Pre-Annotation Steps:

- Curate Clean and Diverse Datasets: Remove duplicates, irrelevant data, and inconsistencies to ensure that only valuable data is annotated. Diverse datasets improve model robustness.

- Leverage AI-Assisted Pre-Labeling: Use automated tools to pre-label data, allowing annotators to focus on refining and validating the results. This approach accelerates the annotation process.

- Set Realistic Timelines: Break down the workflow into manageable milestones, enabling iterative improvements and accommodating unforeseen challenges.

Monitoring and Feedback Loops

-

Iterative Feedback to Refine Annotations:

- Regularly review annotations to identify errors or inconsistencies.

- Provide detailed feedback to annotators to ensure continuous learning and improvement.

-

Alignment Between Annotators and AI Developers:

- Foster collaboration to ensure annotations align with the specific needs of AI models.

- Developers should communicate the model’s requirements, and annotators should clarify uncertainties early on.

Benefits of Workflow Optimization

- Improved Efficiency: A streamlined process reduces time and resource wastage.

- Higher Accuracy: Continuous monitoring and feedback ensure annotations are precise and consistent.

- Scalability: Optimized workflows can handle larger datasets without compromising quality.

By focusing on workflow optimization, AI and ML companies can achieve faster project completion while maintaining the high standards necessary for effective model training.

Emerging Trends in Data Annotation

Data annotation for AI is advancing swiftly, driven by emerging trends that are redefining the future of data annotation.

- Self-supervised learning reduces dependency on extensive manual annotation by enabling models to learn patterns from unlabeled data, streamlining the annotation process.

- Synthetic data provides scalable and diverse datasets while minimizing costs and ethical concerns.

- Generative AI tools help in augmenting datasets, offering innovative ways to create labeled data efficiently and accurately.

Together, these advancements are driving higher-quality outcomes, faster processing, and cost-effective solutions, positioning data annotation at the forefront of machine-learning innovation.

Conclusion

In the fast-paced world of AI development, effective data annotation is the bridge between raw data and intelligent systems. By implementing strategies like clear guidelines, advanced tools, hybrid approaches, and rigorous quality control, organizations can build datasets that power accurate and reliable AI models. As data annotation evolves with advancements such as AI-assisted techniques and scalable platforms, opportunities to streamline processes and enhance outcomes continue to grow.

Prioritizing these strategies not only accelerates project timelines but also ensures a competitive edge in delivering impactful AI solutions. The future of AI lies in the quality of its data, and mastering annotation practices today will define the innovations of tomorrow.

Looking for reliable data annotation services?

Discover how our data annotation experts can streamline AI projects with precision.