- Object detection in computer vision has significantly evolved over the last two decades, becoming essential for many applications like image segmentation and tracking.

- With the advent of deep learning networks and powerful GPUs, object detectors and trackers have become more efficient, leading to breakthroughs in the field.

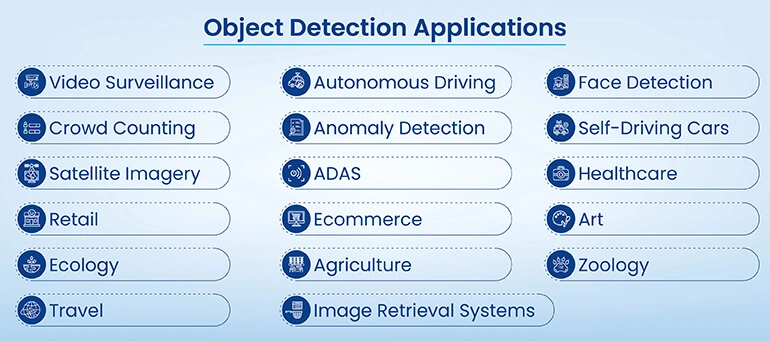

- Object detection shows the versatility and importance across a wide range of applications in retail, autonomous driving, and agriculture industries.

Table of Contents

- What is object detection?

- How object detection works

- Why deep learning is essential for object detection

- Training Data for Object Detection

- Key components of a high-quality object detection

- Applications of object detection

- Top 7 object detection challenges

- How to get started with object detection

- Conclusion

The object detection market is poised for huge growth driven by increasing advancements in AI and demand across various industries. According to published reports, the global object detection market will reach a value of USD 163.75 billion by 2032.

Object detection is both the technique and goal of complex image annotation used in crucial tasks like vehicle counting, activity recognition, and face detection, among others. Current object detection technologies can achieve detection accuracies above 90%.

In the retail industry, object detection aids in inventory management, detecting out-of-stock items, and automating restocking processes. In the medical field, it assists in computer-aided diagnosis, and overcoming challenges such as low resolution, high noise, and small object detection.

We take this opportunity to take you through everything that one should know about best object detection.

What is object detection?

Object detection is a computer vision technique that identifies and locates objects within images or video frames. This technology has become increasingly crucial in autonomous driving, surveillance, robotics, and many more. With advancements in AI and the increasing demand for object detection services, new opportunities are springing up in this field.

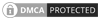

As an image annotation technique, object detection focuses on two tasks: object classification and object localization. First, it identifies what the object is and then locates the object using bounding boxes.

Say, for example, you need to analyze traffic on a busy highway. Now, here, image recognition would classify the whole thing as a traffic scene. And then it is object detection that would separately identify each car, person, bike, etc., using bounding boxes as ‘car’, ‘person’, or ‘bike’.

In AI and machine learning, object detection powers various real-world applications, such as real-time decision making for self-driving cars. It helps detect lanes, signals, or any kind of obstacles. Other industries where it is used include retail for inventory tracking, medical imaging to locate tumours or anomalies in scans, agriculture for crop monitoring, and so on.

To understand in simple terms, object recognition identifies what is in the image, while object detection identifies the objects using bounding boxes.

Here is a comparison between the two:

| Feature | Object Recognition | Object Detection |

|---|---|---|

| Primary Goal | Identify main object | Identify and locate multiple objects |

| Output | Single class label | Class label + bounding boxes |

| Localization | Not provided | Yes (bounding boxes) |

| No. of Objects | Typically, one | Multiple objects per image |

How object detection works

Object detection goes beyond simply identifying what’s in an image, it also pinpoints exactly where each object appears. By combining machine learning, deep learning, and computer vision, these systems learn to recognize objects through thousands of labelled examples. Once trained, they can detect and classify new objects in real-world images or videos with speed and accuracy.

Here is the step-by-step process

1 Image Input – A digital image or video frame is fed into the model.

2 Feature Extraction – The model identifies the textures, shapes, or edges needed to identify objects. Convolutional Neural Networks (CNNs) are used by models to recognize objects.

3 Region Proposal – In some object detection techniques, such as two-stage detectors (like R-CNN and Faster R-CNN), the models work in two parts. First, a region is identified that is likely to contain objects. And then these regions are classified. This helps as the search space for objects is narrowed down.

4 Single Stage Detectors – In such a case, detectors like YOLO, SSD, etc. simultaneously predict bounding boxes and class labels across the entire image. This is a faster process, but maybe less precise.

5 Classification and Localization – Each region (or the full image in single-shot models) is classified into an object category (like car, dog, person, etc.) and given a bounding box to show its location.

6 Post Processing – Once the predictions are done, post-processing steps like ‘Non-Maximum Suppression’ (NMS), ‘Thresholding’ and ‘Bounding Box Regression Refinement’ are used to refine the results.

Various object detection techniques are used throughout the entire process. Here, we list a few of the most important ones for better clarity.

Traditional Techniques (Pre-Deep Learning) – These are based on handcrafted features and classical machine learning algorithms.

- Haar Cascades – Used for face detection; lightweight and fast but limited in complex scenes.

- Histogram of Oriented Gradients + Support Vector Machine (HOG + SVM) – Uses edge detection and Support Vector Machines for pedestrian and object detection.

- Scale-Invariant Feature Transform (SIFT) and Speeded Up Robust Features (SURF) – Used for object recognition and localization through feature matching.

Deep Learning-Based Techniques – These are advanced techniques that use neural networks with flexibility and better accuracy.

Two-Stage Detectors – They generate region proposals first in the image that may contain objects and then classify them. They work very well in scenarios like medical imaging, document analysis, etc., as they provide high precision.

Some of them include:

- R-CNN: Uses CNN on region proposals with SVM classifier

- Fast R-CNN: Extracts shared features, then classifies each region

- Faster R-CNN: Adds a region proposal network for faster detection

- Mask R-CNN: Extends Faster R-CNN with object segmentation masks

- Cascade R-CNN: Refines detections in stages for better accuracy

- R-FCN: Fully convolutional with position-sensitive scoring maps

One-Stage Detectors – These work well for real-time applications as they predict bounding box and class labels in a single step. They are mostly used for applications for autonomous driving and surveillance.

Some of the detectors include:

- YOLO Series: Single-pass detection using grid-based predictions

- SSD: Multi-scale feature maps for varied object size

- RetinaNet: Uses focal loss to handle class imbalance

- EfficientDet: Balances accuracy and efficiency with compound scaling

- CenterNet: Anchor-free, predicts object center and size

- DETR: Transformer-based parallel detection without anchor boxes

Why deep learning is essential for object detection

Before we understand why deep learning is important for object detection, let us first understand deep learning. What does this mean?

In other words, deep learning simulates the complex decision-making power of the human brain. We can call it a subset of machine learning; both deep learning and machine learning are branches of AI. It is trained to solve complex problems and make accurate predictions, and today, deep learning powers many AI applications including object detection in image processing and video object detection.

Now, let us go a bit further and understand why deep learning is essential for object detection. Compared to traditional methods, the automated feature extraction of deep learning makes the system more adaptable.

These features help with complex detection and can detect objects with high accuracy even around varying lightings, clutter, and partial occlusion. Most importantly, real-time object detection capabilities are a great support for projects like self-driving vehicles.

Deep learning integrates seamlessly with advanced techniques like instance segmentation, semantic segmentation, and 3D object detection, helping a holistic understanding of objects.

The Future of Data Annotation: Key Trends and Innovations

- Industries globally are impacted by advancements in annotation.

- Smart tools and techniques enhance the performance of ML models.

- Synthetic data and multimodal annotation are trends that will dominate.

- Ethical data annotation practices ensure responsible AI development.

Training data for object detection

Deep learning models depend on the quality, quantity, and annotation accuracy of training data. First, you figure out the objects you want your model to detect, and then the data set is prepared using examples of the same objects.

The model learns through images and detailed annotations provided in the training dataset. This helps it develop a clear understanding of what objects are present and where they are located, making it foundational for object detection in image processing.

To put it in simple terms, training data for object detection includes images with multiple objects, bounding boxes marking object locations, and class labels that identify object types. This approach also extends to video object detection, where the model performs detection across continuous frames crucial for applications like surveillance and smart vehicles.

When optimized for speed and performance, these models can also enable real-time object detection, allowing systems to respond instantly in dynamic environments.

Key components of a high-quality object detection dataset

Key components of a high-quality object data set include:

- Accurate Annotations (Ground Truth) – Every object in the image should be labeled precisely and consistently, so the model learns from complete, correct, and clearly defined examples, even when objects are partially hidden or cropped.

- Diversity and Representativeness of Images – Your dataset should reflect the real world, capturing objects in different scenes, sizes, lighting conditions, poses, and backgrounds to help the model perform well in varied environments.

- Sufficient Data Quantity – The more diverse and well-balanced data you provide across all object classes, the better your model can understand complex patterns and avoid being biased toward frequently seen items.

- Clear Annotation Guidelines and Quality Control – Providing clear instructions, properly training annotators, and reviewing their work helps maintain consistent, high-quality labels across your dataset.

- Appropriate Data Format and Organization – Using standard formats and a clean file structure ensures smooth integration with object detection tools and makes managing the dataset easier.

- Addressing Class Imbalance – If some object classes are underrepresented, applying smart balancing techniques can prevent the model from ignoring them during training.

- Data Splitting for Training, Validation, and Testing – Carefully separating your data into training, validation, and test sets without any overlap helps you accurately measure how well your model will perform in the real world.

Applications of object detection

Object detection is changing the way machines interact with the world around them. Let’s look at how it’s being used in everyday industries.

Intelligent Video Analytics – From monitoring crowds to detecting intruders, object detection powers smarter surveillance systems that respond in real time. It helps security teams analyze footage efficiently by automatically identifying people, objects, or unusual activity.

Autonomous Vehicles – Self-driving cars rely on object detection to recognize pedestrians, vehicles, traffic signs, and obstacles on the road. This real-time awareness is key to making safe navigation decisions and preventing accidents.

Defect Inspection in Manufacturing – Object detection is used on production lines to identify product defects, missing components, or irregularities instantly. It boosts quality control by reducing human error and speeding up the inspection process.

Top 7 object detection challenges

- Class Imbalance – When certain object classes appear far more often than others, models may become biased and struggle to detect underrepresented classes accurately.

- Occlusion and Overlapping Objects – Objects that are partially hidden confuse models, making it hard to correctly identify and separate them.

- Varying Lighting and Weather Conditions – Changes in lighting, shadows, or weather can distort how objects appear, affecting detection performance.

- Scale Variation – Objects appear at different sizes depending on the distance or image resolution, and detecting both tiny and large objects in one frame remains a challenge.

- Background Clutter and Similar-Looking Objects – Busy backgrounds or objects with similar shapes and colors can lead to false positives or misclassification.

- Real-Time Processing Constraints – For applications like autonomous driving or live video surveillance, object detection must be both accurate and lightning-fast, which is a tough balance to strike.

- Lack of High-Quality Training Data – Without diverse, well-annotated data, models may not generalize well and could miss important variations in object appearance.

How to get started with object detection

Conclusion

Object detection is a fundamental computer vision task that identifies and classifies objects within images or videos, essential in various applications, like self-driving cars, asset inspection, and video surveillance.

Utilizing convolutional neural networks, such as RetinaNET, YOLO, and SSD, this technology achieves high-speed and accurate detection. Despite challenges such as varying appearances and occlusions, it remains crucial for advancing AI and robotics. Leveraging these techniques can enhance safety, efficiency, and innovation in numerous fields.

Scale your business with our enterprise AI prompt services

Achieve business goals faster using our AI services