- Brokerages, lenders and investors rely on real estate data to guide their decisions.

- Due to the fragmented nature of available information, real estate data must be professionally aggregated, validated and continuously refreshed.

- Clean property value data results in enhanced profitability and efficiency for participants in the brokerage sector.

Table of Contents

- Understanding real estate data ecosystem

- Why high quality real estate data is foundational for decision making

- Key challenges in real estate data acquisition

- How professional real estate data aggregation resolves these challenges

- Real use cases: Where clean property data impacts decision making

- The role of HitechDigital in powering data driven real estate operations

- Collecting real estate data from diverse sources

- Cleansing real estate data to remove duplicates

- Validate real estate data to identify missing data

- Real estate data analysis & reporting for accurate market trends

- Transform real estate data in client specific formats

- Maintaining real estate data to keep it current

- Data products delivered

- How high quality data improves business efficiency and profitability

- Future trends: Where real estate data is heading

- Conclusion

Data-driven strategies are now crucial across real estate sectors such as brokerage, lending, appraisal and investment. The global real estate market is projected to be worth an estimated $7.03 trillion in 2034, up from approximately $4.58 trillion in 2026. In a market of this size, even small gains in data efficiency can produce large returns.

Real estate data comes in many forms, from public records and multiple listing services (MLS) to geospatial layers. Organizations often struggle to access and use these resources because they lack a cohesive view of the data.

Professional real estate data services collect raw data, validate it, normalize it, and convert it into actionable intelligence. Real estate data providers leverage this clean property value data across the ecosystem to enable faster underwriting, accurate valuation, and scalable automation.

Understanding real estate data ecosystem

Property-level data includes characteristics such as APNs, parcel maps and land uses; deeds and ownership information, including grantors/grantees and transfer dates; and mortgage and lien data. Market data includes MLS listing data, days on market and comparable sales data.

Risk-related data includes foreclosure filings, bankruptcy records and probate records. Regulatory data includes NMLS loan originator records and zoning data. Agents receive real estate data through MLS feeds that are updated in near real time. However, normalizing this data and ensuring the quality of the input remains difficult.

As of 2025, there are over 10,000 PropTech companies worldwide. While there are many potential solutions to problems, the industry still lacks high-quality, standardizable data inputs.

| Data category | Key components | Primary use cases |
|---|---|---|
| Public records | APN, parcel boundaries, legal descriptions, ownership history, grantor/grantee, mortgage/lien amounts, tax assessments, exemptions | Title research, ownership verification, tax analysis, valuation |
| Market & listing data | MLS listings, DOM, status changes, price adjustments, rental comparables, sales comparables | Pricing strategy, competitive analysis, agent workflows |
| Risk & distress data | Foreclosure filings (NOD, lis pendens, REO), bankruptcy, probate, pre-probate, divorce records, environmental/zoning overlays | Distress investing, lead generation, risk assessment |
| Regulatory data | NMLS loan originator licensing, zoning codes, planning documents, building permits, code violations, certificate of occupancy | Compliance verification, development planning, property usage analysis |

Why high quality real estate data is foundational for decision making

Quality is what separates valuable information from irrelevant information for every stakeholder. Research shows that poor data quality costs organizations an average of $15 million per year through misdirected analytics and operational inefficiency.

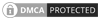

Reducing uncertainty in property valuation

Reliable AVM results require high-quality attribute data. Divergent square footage and bedroom counts among county, MLS and assessor databases reduce the reliability of valuations. By validating property value data, lenders and agents can make better-informed pricing decisions.
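
As a minimal sketch of how divergent attribute values might be surfaced before they reach a valuation model, the example below compares one property's attributes across three sources and flags any value that strays from the cross-source median. The source names, sample values, the `find_conflicts` helper and the 5% tolerance are all assumptions made for illustration.

```python
from statistics import median

# Hypothetical attribute values for one property from three sources.
records = {
    "county": {"square_feet": 1850, "bedrooms": 3},
    "mls": {"square_feet": 2100, "bedrooms": 3},
    "assessor": {"square_feet": 1860, "bedrooms": 4},
}


def find_conflicts(by_source, tolerance=0.05):
    """Return attributes whose values diverge across sources.

    A value is flagged when it differs from the cross-source median
    by more than `tolerance`, expressed as a fraction of the median.
    """
    conflicts = {}
    attributes = {name for values in by_source.values() for name in values}
    for name in sorted(attributes):
        observed = {src: vals[name] for src, vals in by_source.items() if name in vals}
        mid = median(observed.values())
        outliers = {
            src: value
            for src, value in observed.items()
            if mid and abs(value - mid) / mid > tolerance
        }
        if outliers:
            conflicts[name] = outliers
    return conflicts


conflicts = find_conflicts(records)
print(conflicts)
```

Flagged values are candidates for review rather than confirmed errors; which source should win a conflict is a business rule this sketch does not attempt to encode.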

Better underwriting and risk modeling

Mortgage risk scores depend on complete and accurate lien and ownership histories. Title chain gaps lead to delayed underwriting, increased exposure and potential loss. High-quality commercial and residential real estate investment data makes it possible to segment portfolios effectively and detect early warning signs.
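
One simple way to picture a title chain gap is a deed whose grantor is not the grantee of the deed recorded just before it. The sketch below checks for that condition. The record layout, the party names and the `find_chain_gaps` helper are hypothetical, and real chains would also need fuzzy name matching and handling for trusts, estates and multiple owners.

```python
from datetime import date

# Hypothetical deed transfers for one parcel; names and dates are invented.
transfers = [
    {"grantor": "A. Rivera", "grantee": "B. Chen", "recorded": date(2005, 3, 14)},
    {"grantor": "B. Chen", "grantee": "C. Patel", "recorded": date(2012, 8, 2)},
    {"grantor": "D. Osei", "grantee": "E. Novak", "recorded": date(2020, 1, 27)},
]


def find_chain_gaps(deeds):
    """Return (previous_grantee, next_grantor, recorded_date) for each break.

    A break occurs when the grantor on a deed is not the grantee of the
    deed recorded immediately before it.
    """
    ordered = sorted(deeds, key=lambda d: d["recorded"])
    gaps = []
    for prev, curr in zip(ordered, ordered[1:]):
        if prev["grantee"].strip().lower() != curr["grantor"].strip().lower():
            gaps.append((prev["grantee"], curr["grantor"], curr["recorded"]))
    return gaps


gaps = find_chain_gaps(transfers)
for prev_grantee, next_grantor, recorded in gaps:
    print(f"Gap before {recorded}: {prev_grantee} -> {next_grantor}")
```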

Driving superior investment decisions

Using analytical techniques, investors can systematically identify undervalued properties. Additionally, by cleaning and analyzing historical real estate data, PropTech platforms can develop more accurate real estate market forecasts.

Improving portfolio management

Once an investor acquires a property, they need to continuously monitor the asset’s lifecycle until it is sold. For example, REITs rely on asset-level information about each property within their portfolios. An automated data pipeline can identify underperforming properties and opportunities for rebalancing.

Powering real estate technology platforms

PropTech platforms rely on high-quality datasets to support superior user experiences. The accuracy of search results and the completeness of property details directly affect user satisfaction. High-quality real estate data lets developers focus on innovative features rather than spending time remediating data.

Key challenges in real estate data acquisition

While there are many resources available for obtaining real estate data, collecting usable information remains a challenge that limits the quality of decisions and operational efficiency.

- Fragmentation across approximately 3,000 counties: Schemas, formats, update cycles and access methods vary by county, so jurisdiction-specific extraction workflows must be developed for each one.

- Inconsistent taxonomies: Assessor’s parcel number (APN) formats, zoning codes and property type classifications are not standardized.

- Conflicting datasets: Property values recorded by county assessors may differ from MLS pricing data. Square footage and ownership information may also conflict between sources.

- Manual errors: Typographical errors in deeds, data entry omissions in names, amounts and dates, and OCR inaccuracies.

- Latency issues: County systems typically update weekly or monthly, while MLS data updates in real time. This mismatch creates synchronization challenges and stale datasets.

How professional real estate data aggregation resolves these challenges

Real estate data service providers apply specialized processes to transform raw unorganized source data into a single unified dataset with verified information.

Unified data collection from multiple sources

Collection systems gather information through a variety of methods, including automated web scraping, APIs, bulk record transfers and manual collection. Sources include county offices, county records departments, mortgage registry files and court documents.

Advanced data cleansing

Data quality assurance starts with format normalization, which creates a consistent data structure. Schema mapping maps disparate field names to common field names. Deduplication identifies duplicate records for the same physical property. Zoning code and property type normalization creates common terms across jurisdictions.
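
The cleansing steps described above can be sketched in a few lines. This is an illustration under stated assumptions, not a production pipeline: the field names in `FIELD_MAP`, the sample records and the helper functions are hypothetical, and stripping punctuation from an APN is a simplification, since APN formats differ by county and an APN is only meaningful within its own jurisdiction.

```python
import re

# Hypothetical mapping from source-specific field names to a common schema.
FIELD_MAP = {
    "parcel_id": "apn", "APN": "apn", "ParcelNumber": "apn",
    "sqft": "square_feet", "LivingArea": "square_feet",
    "owner_name": "owner", "OwnerName": "owner",
}


def normalize_apn(raw):
    """Strip punctuation and spacing so '123-456-789' and '123 456 789' match."""
    return re.sub(r"[^0-9A-Za-z]", "", str(raw)).upper()


def to_common_schema(record):
    """Rename known fields and normalize the APN; unknown fields are kept as-is."""
    mapped = {FIELD_MAP.get(key, key): value for key, value in record.items()}
    if "apn" in mapped:
        mapped["apn"] = normalize_apn(mapped["apn"])
    return mapped


def deduplicate(records):
    """Merge records sharing an APN; the first non-empty value per field wins."""
    merged = {}
    for record in map(to_common_schema, records):
        target = merged.setdefault(record["apn"], {})
        for key, value in record.items():
            if target.get(key) in (None, "") and value not in (None, ""):
                target[key] = value
    return list(merged.values())


raw = [
    {"parcel_id": "123-456-789", "sqft": 1850, "owner_name": ""},
    {"APN": "123 456 789", "LivingArea": 1850, "OwnerName": "B. Chen"},
    {"ParcelNumber": "987-654-321", "LivingArea": 2400, "OwnerName": "C. Patel"},
]
clean = deduplicate(raw)
print(clean)
```

Here the first two input records describe the same parcel, so they collapse into one record whose empty owner field is filled from the second source.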

Rigorous data validation

Records from different data collections are cross-referenced and checked for logical consistency to confirm that no invalid or impossible values exist. APNs, parcel boundaries and GIS data are validated to confirm geographic location, and ownership chains are verified for missing titles.
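
A logical consistency check can be as simple as a function that returns a list of problems for each record. The sketch below assumes a hypothetical record layout (`apn`, `square_feet`, `year_built`, `latitude`, `longitude`) and a small, illustrative rule set; a real rule set would be far larger and tuned per jurisdiction.

```python
from datetime import date

REQUIRED_FIELDS = ("apn", "square_feet", "year_built")  # assumed required fields


def validate_record(record, today=None):
    """Return a list of human-readable problems found in one property record."""
    today = today or date.today()
    problems = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    sqft = record.get("square_feet")
    if isinstance(sqft, (int, float)) and sqft <= 0:
        problems.append("square_feet must be positive")
    year = record.get("year_built")
    if isinstance(year, int) and year > today.year:
        problems.append("year_built is in the future")
    lat, lon = record.get("latitude"), record.get("longitude")
    if isinstance(lat, (int, float)) and not -90 <= lat <= 90:
        problems.append("latitude out of range")
    if isinstance(lon, (int, float)) and not -180 <= lon <= 180:
        problems.append("longitude out of range")
    return problems


suspect = {
    "apn": "123456789",
    "square_feet": 0,
    "year_built": 2090,
    "latitude": 34.05,
    "longitude": -218.2,
}
problems = validate_record(suspect, today=date(2026, 1, 1))
print(problems)
```

Returning every problem instead of stopping at the first one makes it easier to route a record to the right correction step.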

Data enrichment for better decision context

To provide additional context for decisions about a property, geospatial layers are combined with demographic data, school district boundaries and distance-based measures. Off-market data is matched with active listings to create a complete picture of a property's market activity. This degree of data harmonization is what defines a “very transparent” market, a category that currently attracts over 80% of global real estate investment.
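
As one example of a distance-based measure, the sketch below computes the great-circle distance from a property to nearby points of interest using the haversine formula. The coordinates, school names and the `nearest` helper are invented for illustration, and straight-line distance is only a stand-in for travel distance.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlambda = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest(prop, places):
    """Return (name, distance_km) of the place closest to the property."""
    return min(
        ((p["name"], haversine_km(prop["lat"], prop["lon"], p["lat"], p["lon"])) for p in places),
        key=lambda pair: pair[1],
    )


# Hypothetical property and points of interest.
home = {"lat": 40.0, "lon": -75.0}
schools = [
    {"name": "School A", "lat": 40.01, "lon": -75.0},
    {"name": "School B", "lat": 40.1, "lon": -75.1},
]
name, distance_km = nearest(home, schools)
print(f"Nearest school: {name} ({distance_km:.2f} km)")
```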

Transformation & structuring for analytics

To bring the collected data into an analysis-ready state, it is structured for the intended application use case. Standardized formats such as JSON and XML are created for easy API consumption. Data normalized into a tabular format can be used with Business Intelligence (BI) and Machine Learning (ML) tools.
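
To make the two delivery shapes concrete, the sketch below serializes the same records as JSON for API consumers and as CSV for tabular tools, using only the Python standard library. The record fields and the `to_json` and `to_csv` helpers are assumptions for illustration.

```python
import csv
import io
import json

# Hypothetical normalized records.
records = [
    {"apn": "123456789", "square_feet": 1850, "owner": "B. Chen"},
    {"apn": "987654321", "square_feet": 2400, "owner": "C. Patel"},
]


def to_json(rows):
    """Serialize records as a JSON array for API consumers."""
    return json.dumps(rows, indent=2, sort_keys=True)


def to_csv(rows, columns):
    """Serialize records as CSV text with a fixed column order for BI/ML tools."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=columns, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


json_text = to_json(records)
csv_text = to_csv(records, ["apn", "square_feet", "owner"])
print(csv_text)
```

Fixing the column order in `to_csv` keeps downstream tools stable even if a source later adds fields; extra fields are ignored and missing ones are written as empty cells.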

Continuous data refresh & API integration

Automated refresh pipelines keep the unified dataset synchronized with its sources: MLS changes are ingested in near real time, while county records are refreshed on their weekly or monthly update cycles. Delivering these updates through APIs keeps downstream applications working from current data.

| Challenge | Resolution approach | Outcome |
|---|---|---|
| Fragmented sources across 3,000+ counties | Multi-channel extraction (APIs, bulk files, manual) | Unified national coverage |
| Inconsistent schemas and missing fields | Format standardization and schema alignment | Consistent data structure |
| Duplicate/conflicting records | Entity resolution and cross-source validation | Single source of truth |
| Manual errors and OCR inaccuracies | Logical consistency checks and field-level correction | Improved accuracy |
| Update frequency mismatches | Automated refresh pipelines with real-time MLS | Current, synchronized data |

Real use cases: Where clean property data impacts decision making

Data quality translates directly into successful day-to-day operations for many businesses in the real estate industry.

Real estate investors & wholesalers

Data quality is crucial when generating leads on properties that are in the probate or foreclosure process. Accurate data helps investors and wholesalers identify distressed properties and move through due diligence quickly.

Brokerages & MLS platforms

When property attributes match assessor records, buyers receive accurate information about the property listed for sale. Accurate ownership details are essential to ensure authorized parties are listed. Validated real estate data gives agents the confidence to represent properties accurately.

Appraisers & valuation modelers

Enriched comparable sets enhance automated valuation model (AVM) performance. Verifying transaction details and the quality of property attributes aligns automated model outputs with appraised values.

The role of HitechDigital in powering data driven real estate operations

HitechDigital delivers reliable real estate data services that help businesses collect, verify and use real estate intelligence across the entire data lifecycle.

Collecting real estate data from diverse sources

We collect real estate data from a variety of sources, such as MLS platforms, county systems and recorder offices, using customized workflows. Combining automation with manual verification helps deliver the highest level of accuracy regardless of jurisdiction.

Cleansing real estate data to remove duplicates

We employ advanced de-duplication algorithms to eliminate redundant records across data sources and deliver clean, accurate data. Attribute normalization standardizes all data to a common format and coding scheme. Field-level corrections systematically fix OCR errors and inconsistencies.

Validate real estate data to identify missing data

Real estate data is cross-validated between datasets to identify conflicts that must be resolved before delivery. HitechDigital also uses chain of title verification to check ownership history for gaps or irregularities.

Real estate data analysis & reporting for accurate market trends

Market trend modeling uses cleansed historical data to model market trends accurately. We also offer asset-level and portfolio-level reporting with customizable views for different stakeholders. Custom BI-ready datasets are optimized for specific analytical tools, so real estate market forecasting can rely on properly structured inputs.

Transform real estate data in client specific formats

Format conversion systematically turns data from the original source into the format the client requires. API-ready feeds allow clients to ingest the data into their systems and platforms in real time. The normalized datasets also ensure compatibility with machine learning models and analytic tools for seamless integration into client workflows.

Maintaining real estate data to keep it current

Real estate data is refreshed on a schedule or through trigger-based models to keep it current. Continuous ingestion of MLS data captures listing changes as they occur. Incremental refreshes of county data capture new filings and additions to existing filings efficiently, without replacing the entire dataset.
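
The idea of an incremental refresh can be illustrated with a small upsert routine. This is a generic sketch rather than a description of any production pipeline: the in-memory `store`, the `updated_at` field and the `apply_incremental` helper are assumptions, and a real system would persist to a database and take change timestamps from the source.

```python
from datetime import datetime


def apply_incremental(store, changes):
    """Upsert changed records into `store` (a dict keyed by APN).

    A change is applied only if it is newer than the stored version,
    so replaying an old batch does not overwrite fresher data.
    Returns the number of records inserted or updated.
    """
    applied = 0
    for change in changes:
        current = store.get(change["apn"])
        if current is None or change["updated_at"] > current["updated_at"]:
            store[change["apn"]] = change
            applied += 1
    return applied


# Hypothetical existing dataset and incoming batch.
store = {
    "123456789": {"apn": "123456789", "status": "active", "updated_at": datetime(2025, 1, 10)},
}
batch = [
    {"apn": "123456789", "status": "pending", "updated_at": datetime(2025, 1, 12)},
    {"apn": "123456789", "status": "active", "updated_at": datetime(2025, 1, 5)},   # stale, skipped
    {"apn": "987654321", "status": "active", "updated_at": datetime(2025, 1, 11)},  # new record
]
applied = apply_incremental(store, batch)
print(applied, store["123456789"]["status"])
```

Only the records that changed are touched, which is what lets a refresh run frequently without rebuilding the full dataset.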

Data products delivered

MLS real estate data provides a comprehensive suite of listing intelligence for participants in the real estate market. Foreclosure and mortgage datasets are a valuable resource for those involved in distress investing and lending risk management. Additionally, NMLS loan originator data facilitates compliance verification and professional background checks. Real estate data for developers covers zoning codes and permits for development planning.

Scalable Aggregation of 7M+ Property Records for a Real Estate Investment Firm

A U.S. real estate investment and management company was struggling to process a large volume of property documents on a regular basis, manually extract data from documents held by multiple county clerk offices, and maintain a current, correct and reliable database.

HitechDigital created a structured end-to-end workflow for collecting, validating and importing millions of property records each day into both a client portal and Microsoft Excel templates. The resulting solution was efficient, scalable, flexible and cost-effective for the client.

The end results were:

- Aggregation of 7M+ property records and ongoing updates

- Turnaround time (TAT) cut from 3–4 days to 24 hours

- Cost savings through an efficient outsourcing model

How high quality data improves business efficiency and profitability

Beyond enabling better decisions, clean data has a direct impact on both operational metrics and financial performance.

- Lower operational expense: Reduced manual verification time, fewer compliance errors and faster underwriting cycles.

- More revenue opportunities: More targeted leads increase conversion rates, and more accurate property valuations allow more transactions to be completed.

- Scale through automation: API-based insights support digital product development, machine learning models enable predictions, and automated alerts allow for timely response.

- Increased user trust: Higher platform retention raises the lifetime value (LTV) of users, and fewer transaction disputes protect reputation.

Future trends: Where real estate data is heading

Predictive models that use AI will help forecast distress probability and value trajectories. Trends in real estate data suggest a movement toward anticipatory systems built on machine learning.

Some of the key developing technologies that are likely to shape the future include:

- AI powered property intelligence: AI models trained on large datasets will anticipate and identify potential distress events, rental performance and changes in property values.

- Blockchain technology for immutable records: Blockchain is a distributed ledger technology that may be used to establish permanent and verifiable records of ownership history and transparent transaction trails, reducing title insurance costs and streamlining closings. The projected CAGR for the blockchain real estate market is 64.8%.

- Real time data exchanges: Systems that link MLS platforms, county recorder offices, zoning authority offices and lender databases will allow for instantaneous updates across all systems, eliminating the time lag between them.

These innovations will change how organizations collect, verify and use property intelligence to achieve a competitive advantage.

Conclusion

Quality real estate data is the foundation of every business decision. The industry's shift to data-driven decision making is well underway, and organizations that lack high-quality, validated data will be at a competitive disadvantage.

Speed and accuracy are key determinants of success. Organizations that partner with companies specializing in these services gain an infrastructure that provides the property intelligence needed to make better-informed decisions, improve operations and produce profitable results.
