- AI model performance hinges on high-quality training data; outsourcing ensures consistency, domain expertise, and scalability across complex, diverse datasets.

- Data preparation outsourcing accelerates time-to-market, supports rapid scaling, and enables internal teams to focus on model innovation and deployment.

- Choosing the right partner involves assessing expertise, compliance, infrastructure, and adaptability to ensure long-term value and strategic AI alignment.

Table of Contents

- Understanding the data preparation bottleneck

- 7 things AI and ML teams should know about outsourcing the data preparation process

- 1. High-quality training datasets determine the success of your AI and ML models

- 2. Outsourcing data preparation offers scalability and speed

- 3. Specialized expertise is crucial

- 4. Data security & compliance cannot be compromised

- 5. Measuring ROI and long-term partnership value

- 6. Customization is key to data preparation

- 7. Making the right choice: Evaluating outsourcing partners

- Conclusion

Artificial intelligence (AI) has been making waves across a wide range of industries. Companies in these sectors are using AI to reach new levels of operational efficiency and gain deeper customer insights. However, the performance of AI-powered applications depends on an often-overlooked ingredient: high-quality training datasets. Whether you want to power generative AI, large language models (LLMs), computer vision systems, or chatbots, AI data preparation remains the key to model accuracy, reliability, and scalability.

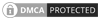

The global AI training dataset market generated USD 2.3 billion in revenue in 2023 and is projected to reach USD 11.7 billion by 2032. Text datasets are expected to contribute USD 4.42 billion of that total, image and video datasets USD 3.79 billion, and audio datasets USD 3.49 billion.

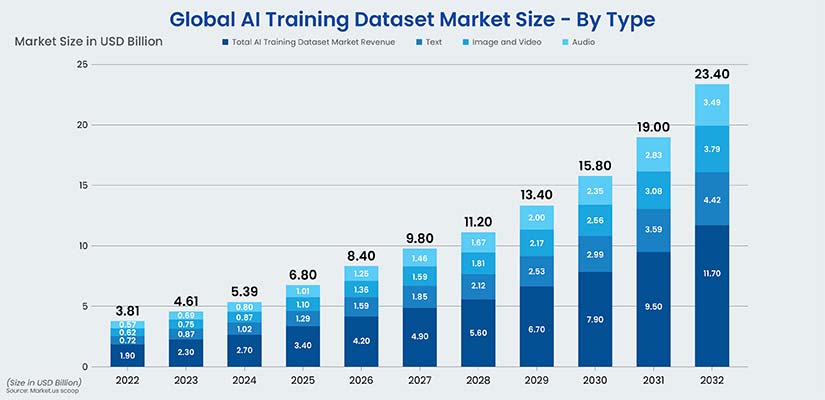

Source: scoop.market.us

This forecast underscores the growing need to invest in AI training datasets across automotive, retail and eCommerce, healthcare, BFSI, and several other industries.

The growing sophistication of AI applications has increased the volume, variety, and velocity of the training data they require. Training a single LLM can require hundreds of terabytes of structured and unstructured data, and a computer vision model may need millions of annotated images to perform reliably in real-world scenarios. This surge makes data preparation complex, time-consuming, and resource-intensive. Unfortunately, budget constraints prevent many companies in these industries from maintaining in-house data annotation and labeling teams.

So, what is their first step toward capitalizing on the new possibilities of artificial intelligence? The answer lies in outsourcing data preparation to obtain precisely annotated training datasets for their AI and ML models.

The next question is: how do you find the best outsourcing company for data annotation and labeling? In this blog, we offer a comprehensive guide to finding the right data preparation outsourcing partner. We also discuss why you should outsource this work to AI development services rather than attempt it in-house. Let us start by understanding the data preparation challenges.

Understanding the data preparation bottleneck

AI and ML teams know that accurately labeled training datasets are crucial to AI success, yet data preparation remains a bottleneck. It starts with the immense volume and variety of data types, from high-resolution image and video files, audio and speech recordings, extensive text corpora, and intricate sensor data to the increasingly prevalent synthetic datasets that modern AI applications require.

Preparing diverse datasets for training AI and ML models involves data collection, cleansing, labeling, moderation, validation, and structuring. Each of these tasks requires domain-specific expertise and experience, as well as advanced tools and infrastructure that in-house AI teams often lack.

AI and ML models trained on inconsistent, noisy, or biased data suffer from low accuracy, poor generalization, and fairness issues, which makes them unreliable in real-world applications. High-stakes industries such as healthcare, autonomous vehicles, and financial services, where the consequences of failure are severe, cannot afford such outcomes.

Flawed training datasets also extend development timelines, inflate costs, and hamper the scalability of advanced AI applications like generative AI, large language models (LLMs), and computer vision systems.

After realizing these bottlenecks, many companies have started outsourcing data preparation and annotation as a strategic solution.

Partnering with specialized data preparation service providers helps them offload the heavy lifting of data preparation. They also tap into domain expertise while reallocating internal resources toward model innovation, deployment, and optimization. Together, these benefits speed up time to market and improve AI ROI.

7 things AI and ML teams should know about outsourcing the data preparation process

Now that we have seen how critical high-quality training data is to the success of your AI and ML models, and where in-house data preparation runs into bottlenecks, here are the seven most important things to know about outsourcing data preparation and why each one matters.

1. High-quality training datasets determine the success of your AI and ML models

The success or failure of data-hungry AI and ML models hinges on high-quality training datasets. Even the most sophisticated algorithms underperform when trained on low-quality or flawed data. Inaccurate, unstructured, mislabeled, or biased data leads to reduced accuracy, biased model outputs, and costly real-world failures.

In healthcare, an AI-powered diagnostic tool trained on unverified data can produce life-threatening outcomes. Similarly, in the automotive sector, poor image labeling can impair object detection in autonomous systems.

The need for clean, annotated, and well-structured data is even more pressing for LLM fine-tuning, generative AI, and virtual assistants.

| AI Application | Data Requirement |
|---|---|
| Large Language Models (LLMs) | Balanced, context-rich textual datasets to generate coherent and unbiased responses. |
| Generative AI | Precisely labeled and curated data to produce high-fidelity outputs. |
| Chatbots & Virtual Assistants | Structured, domain-specific dialogue data for meaningful and accurate interactions. |

Outsourcing to specialized data service providers offers a strategic path to achieving this high level of quality. Providers with domain-specific expertise understand the contextual nuances, regulatory requirements, and data sensitivities unique to each industry.

As part of a data preparation project for a German construction company, HitechDigital collected, standardized, and verified multilingual, unstructured news articles from online sources. A blend of automation and human supervision by trained annotators ensured that complex construction information was accurately classified and labeled for the client’s LLM training.

Effective data preparation for machine learning involves several critical steps: data cleansing to remove inconsistencies and errors, annotation and labeling to ensure accurate tagging, and validation and verification to confirm dataset quality. This integrated approach improves model reliability, reduces risks, and speeds up deployment.
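The cleansing and validation steps above can be sketched in a few lines of Python. This is a minimal illustration, not any provider's actual pipeline: the `Record` type, the document-classification labels in `ALLOWED_LABELS`, and the `cleanse` and `validate` helpers are all hypothetical. Cleansing here means collapsing whitespace, dropping empty items, and removing duplicates; validation means rejecting labels that fall outside the agreed taxonomy.

```python
from dataclasses import dataclass

# Hypothetical label taxonomy for a document-classification project.
ALLOWED_LABELS = {"invoice", "receipt", "contract"}


@dataclass(frozen=True)
class Record:
    item_id: str
    text: str
    label: str


def cleanse(records):
    """Normalize whitespace and labels, then drop empty items and duplicates."""
    seen = set()
    cleaned = []
    for r in records:
        text = " ".join(r.text.split())  # collapse runs of whitespace
        label = r.label.strip().lower()
        if not text:
            continue  # nothing to annotate
        key = (text.lower(), label)
        if key in seen:
            continue  # duplicate of an item already kept
        seen.add(key)
        cleaned.append(Record(r.item_id, text, label))
    return cleaned


def validate(records, allowed=ALLOWED_LABELS):
    """Split records into in-taxonomy ones and ones to send back for relabeling."""
    valid = [r for r in records if r.label in allowed]
    rejected = [r for r in records if r.label not in allowed]
    return valid, rejected


raw = [
    Record("1", "  Invoice   #42 ", "Invoice"),
    Record("2", "invoice #42", "invoice"),      # duplicate after normalization
    Record("3", "   ", "receipt"),              # empty text
    Record("4", "Lease agreement", "contrat"),  # label typo outside the taxonomy
]
cleaned = cleanse(raw)
valid, rejected = validate(cleaned)
```

Here record 2 is dropped as a duplicate, record 3 as empty, and record 4 is routed back for relabeling because `contrat` is not in the taxonomy. A real verification step would add checks specific to the data type and domain.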

2. Outsourcing data preparation offers scalability and speed

The volume and diversity of training data scale with the complexity of the AI project. Collecting millions of image samples for computer vision models, generating synthetic speech data for virtual assistants, or annotating vast text datasets for LLM fine-tuning all require the bandwidth and infrastructure to move fast without compromising quality, which in-house teams often lack. Speed and scalability are constant challenges for AI and ML teams working toward ambitious deployment goals under tight deadlines, and the situation worsens when they depend solely on in-house data teams.

AI data preparation outsourcing addresses these challenges head-on by giving clients access to on-demand, expert-led scalability. These service providers can ramp up data labeling and collection across multiple languages, domains, and data types. Their AI Data Collection services ensure rapid, targeted gathering of image, video, audio, or sensor data. And the benefits do not stop there.

For a Swiss client’s food waste assessment project, thousands of images were annotated at speed to fuel machine learning models and fast-track food waste analysis. HitechDigital prepared the image data for annotation by organizing and standardizing thousands of visuals, trained annotators on European food items, and established a structured labeling and QA workflow to ensure consistent, accurate, model-ready annotated data.
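A structured QA workflow usually needs a number for "consistency." One common choice is Cohen's kappa, which measures agreement between two annotators after correcting for the agreement expected by chance. Its use here is an assumption: the case study does not say which metric was applied. A minimal sketch with hypothetical food labels:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: chance-corrected agreement between two annotators."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items both annotators labeled identically.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1:
        return 1.0  # both annotators used one identical label for every item
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["bread", "cheese", "bread", "pasta", "cheese", "bread"]
annotator_2 = ["bread", "cheese", "pasta", "pasta", "cheese", "bread"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

Here the annotators agree on 5 of 6 items, and kappa comes out at 0.75. Where to set the acceptance threshold is a project decision that this sketch does not prescribe.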

Synthetic data generation services fill gaps in underrepresented data scenarios to build robust, diverse training datasets. They are backed by high-accuracy data annotation services that deliver faster turnaround without sacrificing precision or compliance.
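Before commissioning synthetic data, a team needs to know where the gaps are. A simple class-count gap analysis is one way to size them. The sketch below is illustrative only: the road-condition labels, the `synthetic_gap` helper, and the default of topping up every class to the size of the largest one are assumptions.

```python
from collections import Counter


def synthetic_gap(labels, classes=(), target=None):
    """Extra samples per class needed to reach `target`.

    `classes` lists classes the model must cover even if no real samples exist yet.
    `target` defaults to the size of the largest class.
    """
    counts = Counter(labels)
    for cls in classes:
        counts.setdefault(cls, 0)  # make missing classes visible with a zero count
    if not counts:
        return {}
    if target is None:
        target = max(counts.values())
    return {cls: max(0, target - n) for cls, n in sorted(counts.items())}


road_labels = ["dry"] * 500 + ["wet"] * 120 + ["snow"] * 30
gaps = synthetic_gap(road_labels, classes=["fog"])
```

Here `fog` has no real samples at all, so it shows the largest gap. Synthetic data would then be generated, and annotated, only for the classes with a non-zero gap.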

The result? Rather than being bogged down by time-consuming data preparation, you fast-track model training cycles, reduce time to market, and redirect internal resources toward model deployment and further innovation. In AI, speed translates into competitive advantage, and outsourcing data preparation is a strategic enabler of profitable growth and scalability.

3. Specialized expertise is crucial

As discussed, AI model performance is not just about data volume; precision, context, and domain expertise also play a critical role. Medical AI, facial recognition, speech recognition, and similar applications demand experienced data annotators with proven expertise. In-house AI teams may lack this hands-on experience and niche expertise, leading to inaccuracies that undermine trustworthiness and overall model performance.

This is exactly where AI and ML companies realize the ROI of their outsourcing decisions. Established data preparation service providers have trained annotators and domain specialists on board who are well versed in the nuances of diverse domains, from HIPAA-compliant workflows in healthcare and multilingual capabilities for global voice assistants to taxonomy alignment for retail product categorization. Labeling sensor data in automotive applications or annotating clinical text in healthcare requires not only technical skills but also the industry-specific knowledge that ensures data accuracy, relevance, and compliance.

In-house teams often struggle to gain hands-on experience with advanced annotation tools and collaborative platforms, and to build robust QA workflows that keep quality consistent across large-scale projects. Third-party data preparation service providers, by contrast, are experts at handling complex data types that demand specialization. They deliver Audio and Speech Data Annotation for multilingual NLP and ASR systems, Sensor Data Annotation for automotive and IoT applications, and Text Data Annotation aligned with industry-specific language models.

Tapping into this expertise not only helps you gain speed but also provides depth, accuracy, and compliance crucial to the success of your AI model when deployed.

4. Data security & compliance cannot be compromised

Data security and compliance are non-negotiable when outsourcing data preparation for domains such as healthcare diagnostics or financial services. How to maintain quality control in outsourced data preparation is a question that concerns every AI development team. While using the data, teams must also safeguard personally identifiable information (PII), medical records, and confidential user data against breaches and misuse.

Experienced outsourcing partners typically comply with international standards such as HIPAA (for US healthcare data), GDPR (for EU data privacy), and ISO/IEC 27001 (for information security management). Such compliance provides assurance that data is handled securely and processed in line with global regulatory requirements.

Use this checklist to vet an outsourced data preparation service provider:

- Do they offer secure data transfer and storage protocols?

- Are team members trained in compliance?

- Do they conduct regular audits and risk assessments?
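Whatever the vendor's safeguards, a client can also reduce exposure before data leaves its premises. One option is to replace direct identifiers with keyed pseudonyms. The sketch below uses HMAC-SHA256 from Python's standard library; the field names and the hard-coded key are hypothetical, and a real key belongs in a secrets store that stays in-house. Pseudonymized data is generally still treated as personal data under GDPR, so this complements the checklist rather than replacing it.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; keep the real key in-house, never with the vendor.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-store"


def pseudonymize(value, key=SECRET_KEY):
    """Deterministic keyed token: the same normalized input always maps to the same token."""
    normalized = str(value).strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def prepare_for_vendor(records, pii_fields, key=SECRET_KEY):
    """Copy records, replacing each listed PII field with its pseudonym."""
    prepared = []
    for record in records:
        safe = dict(record)  # shallow copy so the originals stay untouched
        for field in pii_fields:
            if safe.get(field) is not None:
                safe[field] = pseudonymize(safe[field], key)
        prepared.append(safe)
    return prepared


records = [{"patient_email": "Jane.Doe@Example.com", "note": "Follow-up scan in two weeks"}]
shared = prepare_for_vendor(records, ["patient_email"])
```

Because the token is deterministic, labels returned by the vendor can be joined back to the original records in-house, while the vendor never sees the email address itself.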

5. Measuring ROI and long-term partnership value

Organizations increasingly see outsourcing data preparation not as a short-term fix or a one-time activity but as a strategic long-term investment. As a result, they measure the success of outsourcing in model performance gains, higher annotation accuracy, and faster time to market.

So how do you calculate the ROI of outsourced data preparation projects?

Calculating the ROI of outsourced data preparation projects involves analyzing both costs and benefits to determine the financial impact and effectiveness of the outsourcing decision.

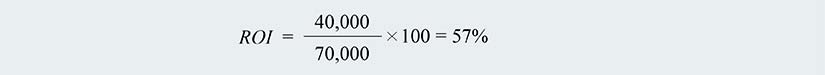

The basic formula for calculating ROI is:

$$\text{ROI} = \frac{\text{Net profit}}{\text{Investment}} \times 100\%$$

Practical example: Suppose a company spends $40,000 on outsourcing image annotation, and the resulting improvements in efficiency and quality generate an additional $70,000 in revenue. The net profit is $70,000 (revenue) minus $40,000 (investment), or $30,000. Using the formula:

$$\text{ROI} = \frac{30{,}000}{40{,}000} \times 100\% = 75\%$$

This means that the company achieved a 75% return on its investment in outsourcing.

To calculate ROI accurately, consider all associated costs, such as vendor fees, communication expenses, and any internal costs related to managing the outsourcing process. Also, do not forget to factor in benefits like increased revenue and cost savings from reduced in-house operations.
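ROI as net profit divided by investment, with all costs and benefits itemized, fits in a small helper. This is a sketch, not a financial model: the `outsourcing_roi` name and the cost and benefit categories are hypothetical, and the second set of figures is invented purely to show itemization.

```python
def outsourcing_roi(costs, benefits):
    """ROI in percent: (total benefits - total costs) / total costs * 100."""
    total_cost = sum(costs.values())
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    net_profit = sum(benefits.values()) - total_cost
    return net_profit * 100 / total_cost


# Article example: $40,000 spent on annotation, $70,000 in added revenue.
simple = outsourcing_roi({"vendor_fees": 40_000}, {"added_revenue": 70_000})

# Hypothetical itemized figures, including internal management overhead and in-house savings.
itemized = outsourcing_roi(
    costs={"vendor_fees": 40_000, "communication": 2_000, "internal_management": 8_000},
    benefits={"added_revenue": 70_000, "in_house_savings": 15_000},
)
```

With the $40,000 and $70,000 figures this returns 75.0; with the hypothetical itemized figures, the extra overhead and savings shift the result to 70.0.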

Leading data preparation service providers support clients across the entire data lifecycle, from data collection and annotation to cleansing, synthetic data generation, and validation. They don’t just deliver data; they help you build a repeatable, scalable framework for success. Whether you’re fine-tuning a foundation model or launching a real-time chatbot, they can help you grow profitably.

6. Customization is key to data preparation

In data preparation for AI development, a one-size-fits-all approach rarely works. Whether the project is an LLM powering a financial advisor chatbot or a computer vision system for autonomous vehicles, its success depends on customized data preparation.

Outsourcing partners typically deliver services tailored to their clients’ domains, including industry-specific taxonomies, edge-case annotation protocols, bespoke labeling criteria, and context-aware moderation rules. Without this level of precision, AI models risk drifting from real-world expectations and user needs.

These third-party providers use well-designed processes to deliver customized data structuring for LLM fine-tuning, ensuring that the model aligns with domain intent, style, and compliance standards. Their data moderation services adapt to brand safety thresholds, and their synthetic data augmentation solutions are tailored to bridge data gaps in high-risk categories.
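What customized data structuring for LLM fine-tuning looks like in practice depends on the training stack. As one illustration, the sketch below turns reviewed question-answer pairs into JSON Lines records with a `messages` list of `system`, `user`, and `assistant` turns, a layout similar to the chat format several fine-tuning APIs accept. The system prompt, the example pairs, and the exact schema are assumptions to check against your own platform's documentation.

```python
import json


def to_chat_jsonl(pairs, system_prompt):
    """Serialize (question, answer) pairs as one chat-style JSON object per line."""
    lines = []
    for question, answer in pairs:
        question, answer = question.strip(), answer.strip()
        if not question or not answer:
            continue  # skip incomplete pairs rather than training on them
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


SYSTEM = "You are a cautious financial assistant. Do not give personalized investment advice."
pairs = [
    ("What is an index fund?", "A fund that aims to track the performance of a market index."),
    ("  ", "An answer with no question should be dropped."),
]
jsonl = to_chat_jsonl(pairs, SYSTEM)
```

Keeping the system prompt in every record is one way to encode domain intent and style; whether your platform wants it there is part of the assumption above.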

7. Making the right choice: Evaluating outsourcing partners

Shortlisting the right data preparation partner is a critical step for your AI initiative to succeed. The ideal partner brings not only capacity but also quality, scalability, security, customization, and domain expertise.

| Step | Action Items | What to Look For |
|---|---|---|
| Define Requirements | Be clear about what you expect from the outsourcing company. | Clarity on project scope and performance metrics |
| Research Vendors | Identify vendors with relevant domain expertise. | Industry-specific experience and a successful track record |
| Request and Compare Proposals | Request detailed service proposals across key offerings. | |
| Run Pilot or POC | | |
| Review Credibility | | Proven outcomes in similar AI projects aligned with your goals |
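For the "Run Pilot or POC" step, one straightforward way to compare vendors is to have each label the same small sample that your team has already labeled as a gold standard, then score them against it. The sketch below assumes hypothetical item IDs, labels, and a 95% acceptance threshold; a real pilot would also weigh turnaround time, cost, and edge-case handling.

```python
def pilot_accuracy(vendor_labels, gold_labels):
    """Share of gold-standard items the vendor labeled correctly; skipped items count as errors."""
    if not gold_labels:
        raise ValueError("gold-standard sample is empty")
    correct = sum(1 for item, gold in gold_labels.items() if vendor_labels.get(item) == gold)
    return correct / len(gold_labels)


def shortlist(pilots, gold_labels, min_accuracy=0.95):
    """Vendors meeting the threshold, as (name, accuracy) pairs, best first."""
    scored = [(name, pilot_accuracy(labels, gold_labels)) for name, labels in pilots.items()]
    return sorted((s for s in scored if s[1] >= min_accuracy), key=lambda s: s[1], reverse=True)


gold = {"img1": "apple", "img2": "bread", "img3": "cheese", "img4": "apple"}
pilots = {
    "Vendor A": {"img1": "apple", "img2": "bread", "img3": "cheese", "img4": "apple"},
    "Vendor B": {"img1": "apple", "img2": "bread", "img3": "bread"},  # one error, one skipped
}
ranked = shortlist(pilots, gold)
```

Counting skipped items as errors keeps a vendor from inflating its score by labeling only the easy cases.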

Finally, choose a partner that fits your long-term strategy: one that evolves with your AI roadmap and continues to add value as your initiative scales.

Conclusion

Outsourcing data preparation is not just about cost savings; it is a strategic necessity for AI teams that need scalability, uncompromised quality, faster development, and sustained strategic expansion. By leaving data gathering, cleaning, annotation, and structuring to specialists, your team can focus on core AI innovation. The right outsourcing partner will enable quicker iterations and manage varied datasets effectively.

So choose a partner with deep knowledge of your industry (automotive, healthcare, or BFSI), your particular AI applications (such as generative AI or computer vision), and the subtleties of your data types, from images to sensor data.
