- Understand how fine-tuning enhances AI model performance without the need for retraining or large datasets

- Explore five techniques to control output behavior at inference time for greater consistency, relevance and task alignment

- Learn why mastering prompt parameter optimization is critical for AI/ML teams aiming to boost model accuracy in real-world applications

Table of Contents

- What is Fine-tuning?

- Pretraining vs Finetuning

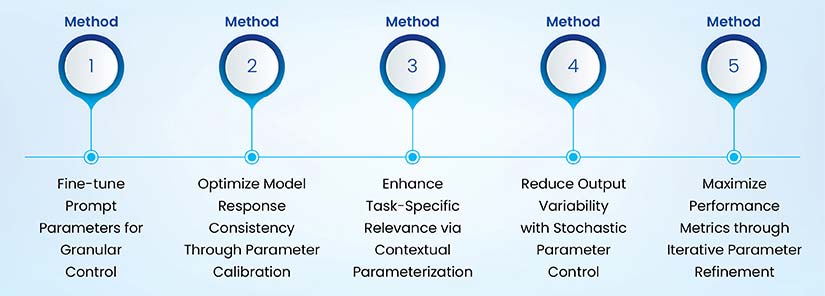

- 5 Ways to Enhance AI Model Performance through Fine-Tuning

- 1: Fine-tune prompt parameters for granular control

- 2: Optimize model response consistency through parameter calibration

- 3: Enhance Task-Specific Relevance via Contextual Parameterization

- 4: Reduce output variability with stochastic parameter control

- 5: Maximize Performance Metrics through Iterative Parameter Refinement

- How will fine-tuning shape the future of AI model performance?

- Conclusion

For companies using AI in critical tasks, accurate and reliable results are the key to success. Pre-trained models are powerful, but they often require adjustments to align with your specific needs. That’s where AI model fine-tuning comes in.

What is Fine-Tuning?

Fine-tuning involves adjusting a model’s parameters to align its behavior with specific tasks, improving accuracy, relevance, and efficiency.

Recently, prompt engineering has gained prominence as a tool for guiding the model’s responses more effectively. Combined with prompt optimization, which involves testing, refining, and iterating on prompts, it helps developers achieve consistent and accurate outputs. Fine-tuning, prompt engineering, and optimization form the foundation for building AI systems that deliver intelligent, context-aware, and scalable results.

Pretraining vs Finetuning

Pre-training gives AI its foundational knowledge—like teaching it how to read. Fine-tuning teaches it how to apply that knowledge to specific tasks. Understanding how these two stages work together is key to building smarter, task-ready AI models.

| Aspect | (Pre-)Training | Fine-Tuning |
|---|---|---|
| Starting Point | Random initialization of model parameters. | Pre-trained model whose weights are then updated. |
| Dataset Size | Massive, unlabeled datasets for self-supervised learning. | Small, task-specific datasets. |
| Learning Approach | Broad knowledge through self-prediction or contrastive learning. | Tailored knowledge for specific tasks. |
| Risk of Overfitting | Low due to large dataset diversity. | Higher risk with small datasets. |
| Computational Resources | Requires significant computational power and time. | Computationally efficient and faster. |
| Annotations Needed | Rarely needed; uses pretext tasks for ground truth. | Requires labeled data for task-specific training. |
| Goal | Build foundational knowledge for diverse applications. | Refine model for domain-specific performance. |
| Training Phases | Forward pass and backpropagation across multiple epochs. | Additional training on top of pre-trained weights. |
| Applications | General-purpose models (e.g., LLMs, CLIP). | Specialized models (e.g., sentiment analysis). |
| Advantages | Enables broad generalization across tasks and domains. | Improves task specificity with less data and compute. |


5 Ways to Enhance AI Model Performance through Fine-Tuning

AI model fine-tuning offers a practical path to improving the precision, consistency, and usefulness of AI-generated responses. By refining both prompt structure and generation parameters, teams can better align outputs with task-specific needs.

Below are five proven techniques to enhance AI accuracy with fine-tuning.

1: Fine-tune prompt parameters for granular control

A prompt is the set of instructions that determines how an AI model responds. To improve AI model performance, however, what counts is not only what you say but how you say it. Fine-tuning prompt parameters such as structure, tone, context, and keywords offers granular control over how the model behaves.

Granular control lets you:

- Define tone (e.g., friendly vs. professional)

- Set structure (e.g., bullet points, summaries, lists)

- Choose response style (e.g., concise, technical, creative)

- Limit randomness for consistent output

- Align with brand voice or compliance rules

This level of granular control helps businesses use AI more effectively, ensuring that every response feels intentional, accurate, and tailored to the task at hand. Adjusting prompt parameters can shift the model’s behavior from highly creative to laser-focused, from verbose to concise, or from exploratory to factual.

| Scenario | Before: One-Size-Fits-All Output | After: Tailored Output with Parameter Tuning |
|---|---|---|
| Legal Tasks | General response lacking legal structure | Structured, citation-like summary with formal tone |
| Medical Communication | Vague or overly broad response | Empathetic, compliant, and clear medical explanation |
| Financial Analysis | Inconsistent depth and tone | Balanced, risk-aware, and concise financial insights |
| Customer Interactions | Generic and tone-deaf replies | Brand-aligned, consistent, and context-aware messaging |
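One way to make tone, structure, and style explicit is to treat them as fields that are rendered into every prompt. The sketch below is only an illustration: `PromptSpec`, `build_prompt`, and the example legal rule are hypothetical names and values chosen for this article, not part of any particular library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptSpec:
    """Hypothetical container for the prompt parameters listed above."""
    tone: str = "professional"
    structure: str = "bullet points"
    style: str = "concise"
    rules: tuple = ()  # brand-voice or compliance rules, one string each


def build_prompt(task: str, spec: PromptSpec) -> str:
    """Render a task and its prompt parameters as one instruction string."""
    lines = [
        f"Task: {task}",
        f"Tone: {spec.tone}",
        f"Format: {spec.structure}",
        f"Style: {spec.style}",
    ]
    lines += [f"Rule: {rule}" for rule in spec.rules]
    return "\n".join(lines)


# Example: a tailored specification for a legal summarization task.
legal = PromptSpec(
    tone="formal",
    structure="numbered summary",
    rules=("Cite the clause number for every claim.",),
)
prompt = build_prompt("Summarize the indemnity section.", legal)
print(prompt)
```

Keeping the specification separate from the task text means the same task can be re-rendered for a different audience by swapping only the `PromptSpec`.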

2: Optimize model response consistency through parameter calibration

In high-stakes industries such as healthcare, legal, and customer service, response consistency is key. Imagine receiving different answers to the same question. That’s where tuning the model’s settings helps. AI models come with built-in randomness to make responses creative and varied, but tasks that require reliable answers depend on reducing that randomness. Adjusting the settings so the AI gives more predictable results is called parameter calibration.

AI-powered customer service uses parameter calibration to provide accurate and reliable answers every time. The reduced unpredictability is essential for trust and satisfaction.

Here’s how some of those settings work:

- Temperature: Lower it to make the AI play it safe and choose the likeliest words.

- Top-p (nucleus sampling): Lower it to restrict the AI to the small set of most probable word choices.

- Repetition and presence penalties: Discourage the AI from repeating words or phrases it has already produced.

By tweaking these parameters, you make the AI more focused and dependable, based on your specific use case.
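To see why a lower temperature makes output more predictable, it helps to look at what the setting does to the model’s token probabilities. The following is a minimal sketch in plain Python; the three logit values are made up for illustration, and real inference libraries apply the same scaling internally.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; temperature must be > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.3):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])
```

At the lower temperature, almost all of the probability mass moves to the top-scoring token, which is why repeated runs tend to agree.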


3: Enhance Task-Specific Relevance via Contextual Parameterization

The contextual parameterization technique incorporates domain-specific context and task-related variables directly into the prompt structure or model configuration. This LLM fine-tuning method ensures the AI delivers responses that are not only accurate but also relevant to the intended use case.

By tailoring prompts and settings based on the nature of the task, you achieve sharper outputs, better alignment with user needs, and fewer errors.

Here’s how contextual parameterization makes a difference:

- Domain-related Inputs: Insert relevant terminology or data, such as product SKUs, legal codes, or industry-specific templates, to improve accuracy

- Role-Based Behavior: Use system prompts to define the AI’s persona (e.g., “You are a professional Chartered Accountant” or “You are a medical transcriptionist”) to match tone and purpose

- Modular Prompt Logic: Create reusable prompt components that adapt dynamically based on workflow, reducing duplication and improving scalability

- Multi-Use Model Efficiency: Leverage one model across multiple tasks by simply swapping contextual elements—no need for multiple deployments
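The points above can be sketched as a small prompt-assembly helper. This is an illustrative sketch only: the `ROLES` table, the `build_messages` function, and the sample reference data are hypothetical, and the `role`/`content` message layout is assumed because many chat-style model APIs use it.

```python
ROLES = {  # hypothetical persona library, one reusable entry per task
    "accounting": "You are a professional Chartered Accountant.",
    "transcription": "You are a medical transcriptionist.",
}


def build_messages(task, user_input, domain_context=()):
    """Assemble chat messages from reusable role and context components."""
    system = ROLES[task]
    if domain_context:
        facts = "\n".join(f"- {item}" for item in domain_context)
        system += "\nUse this reference data:\n" + facts
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_input},
    ]


# The same function serves a different task by swapping the contextual parts.
messages = build_messages(
    "accounting",
    "How should we record this invoice?",
    domain_context=["SKU A-100: office chair", "Fiscal year ends 31 March"],
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Because the persona and domain data are plain inputs, one deployed model can cover several workflows without duplicating prompt text.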

4: Reduce output variability with stochastic parameter control

Large language models generate text probabilistically, so they don’t return the same output every time, even with the same prompt. While this variation can help in creative work, it’s problematic in regulated industries where consistency is essential.

In such scenarios, stochastic parameter control can come to the rescue. It is the method of adjusting randomness-related settings, such as temperature, top-k, and top-p, to reduce unpredictability and ensure stable, relevant, and repeatable responses.

This level of control becomes crucial in enterprise-level applications, such as compliance documentation or legal contract generation, where variations in output can lead to inconsistencies, reworks, or even risk.

Here’s how key stochastic parameters help:

- Temperature: Lower values (0.0–0.3) force the model to choose likelier responses, increasing consistency and minimizing randomness

- Top-k sampling: Limits output selection to the k most probable tokens, filtering out long-tail possibilities that cause erratic responses

- Top-p (nucleus) sampling: Selects from the smallest pool of tokens whose cumulative probability reaches p (e.g., 0.9), balancing relevance and control

- Repetition and presence penalties: Reduce the chance of redundant, repetitive outputs

By managing these parameters effectively, AI teams can align outputs with brand tone, regulatory expectations, and operational workflows. It’s about striking the right balance between diversity and dependability.
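The two truncation strategies described above can be written in a few lines. This is a simplified sketch over a made-up four-token distribution; `top_k_filter` and `top_p_filter` are illustrative names, and production libraries typically perform the same filtering on logits rather than on a dictionary of probabilities.

```python
def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def top_p_filter(probs, p):
    """Keep the smallest set of tokens whose cumulative probability reaches p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {tok: prob / total for tok, prob in kept}


# Made-up next-token distribution for a contract-drafting prompt.
probs = {"shall": 0.5, "must": 0.3, "may": 0.15, "might": 0.05}
print(top_k_filter(probs, 2))    # only the two likeliest tokens remain
print(top_p_filter(probs, 0.9))  # the long-tail token "might" is dropped
```

In both cases the model then samples only from the surviving tokens, which removes the long-tail choices that cause erratic responses.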

5: Maximize Performance Metrics through Iterative Parameter Refinement

Iterative parameter refinement plays an instrumental role in ensuring the accuracy of AI models. This approach involves adjusting model parameters and evaluating them against three main signals:

- Real-world Feedback

- User Behavior

- Performance Metrics (accuracy, response time, or relevance)

The impact of each parameter change is evaluated regularly to fine-tune the AI model’s behavior over time, improving both user experience and business outcomes.

In this approach, setting parameters is not a one-time activity but a continuous process. Here’s how iterative parameter refinement works in practice:

- Establish Key Metrics: Define the goal or end result, e.g., fewer hallucinations, increased user satisfaction, or quicker response generation.

- A/B Test Configurations: Experiment with different temperatures, top-p, or formatting setups to see what delivers better results.

- Analyze Real-World Feedback: Use human-in-the-loop (HITL) reviews, support logs, or user ratings to guide changes.

- Refine Prompt-Parameter Pairs: Adjust prompt wording and refine parameters for better synergy.

- Repeat Continuously: Iterate regularly based on new use cases, user types, or dynamic business goals.

This method turns your AI development process into a feedback loop that keeps learning, improving, and aligning with changing market demands.
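The A/B testing step of this loop might look like the sketch below. Everything here is an assumption made for illustration: `fake_generate` is a stand-in that simulates a model whose output varies more at higher settings, and `consistency` is one simple metric among many. In practice the stand-in would be replaced by a call to your own model and the metric by whatever you defined in the first step.

```python
import itertools
import random
from collections import Counter


def fake_generate(prompt, temperature, top_p, rng):
    """Stand-in for a real model call: higher settings produce more variation."""
    # Hypothetical behaviour; replace with a call to your own model client.
    drift = temperature * top_p
    if rng.random() >= drift:
        return "answer-A"
    return f"variant-{rng.randint(1, 3)}"


def consistency(outputs):
    """Share of runs matching the most common output (1.0 = fully repeatable)."""
    return Counter(outputs).most_common(1)[0][1] / len(outputs)


def ab_test(prompt, temperatures, top_ps, runs=50, seed=0):
    """Score every temperature/top-p pair and return them best first."""
    rng = random.Random(seed)
    results = []
    for temperature, top_p in itertools.product(temperatures, top_ps):
        outputs = [fake_generate(prompt, temperature, top_p, rng) for _ in range(runs)]
        results.append((consistency(outputs), temperature, top_p))
    return sorted(results, reverse=True)


ranking = ab_test("Summarize clause 4.", temperatures=(0.0, 0.7), top_ps=(0.5, 1.0))
best_score, best_temperature, best_top_p = ranking[0]
for score, temperature, top_p in ranking:
    print(f"temperature={temperature} top_p={top_p} consistency={score:.2f}")
```

Rerunning the comparison whenever prompts, use cases, or goals change is what makes the refinement iterative rather than a one-off configuration.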

To address annotation inconsistencies and scalability challenges, a structured prompt engineering workflow was implemented for a leading AI data firm based on continuous feedback loops, quality reviews, and performance monitoring. This resulted in maintaining consistency and achieving high-quality outcomes.

How will fine-tuning shape the future of AI model performance?

Fine-tuning is evolving to become faster, smarter, and more personalized. The next wave of innovation will focus on automation, efficiency, and deeper alignment with user needs.

- Real-Time Tuning: Automated systems will adjust prompt parameters on the fly using reinforcement learning.

- Smarter Use of Data: Techniques like data augmentation and few-shot learning will reduce reliance on massive datasets.

- Personalization at Scale: Models will adapt to specific domains—and even individual users—for more relevant responses.

- On-Device Adaptation: Fine-tuning will happen on local devices for quicker, real-time interactions.

- Responsible AI: Fine-tuning will help reduce bias and improve how clearly we understand AI decisions.

The future isn’t just about better models; it’s about better control. And fine-tuning is the tool that will make that possible.

Conclusion

For AI/ML engineers and technical leaders, mastering prompt parameter optimization is critical for aligning model outputs with task-specific goals—whether in content generation, summarization, question answering, or domain-specific applications.

When it comes to fine-tuning GPT models for accuracy, thoughtful parameter control offers a scalable, efficient way to drive precision without altering the model’s core architecture. It’s not just about making models work. It’s about making them work exactly as intended.
