- Prompt engineering has evolved from a niche skill into a strategic business capability that directly impacts the success of AI implementations.

- Effective prompts require clarity, contextual framing, and structured instruction sequencing to improve performance.

- Domain-specific prompt frameworks deliver measurable business value across diverse industries.

What is prompt engineering, and why is it the secret to unlocking AI’s true potential? In today’s AI-driven world, the right prompt can mean the difference between breakthrough results and costly failures. This guide explains what prompt engineering is, the techniques you can use, and real-world examples to help you maximize ROI from AI systems.

As organizations invest substantial resources in large language models and generative AI systems, they’re discovering that the technology itself represents only half the equation. Many now turn to specialized prompt engineering services to bridge this gap, ensuring that AI models receive clear, context-rich instructions that deliver consistent business outcomes.

Poorly engineered prompts lead to inconsistent outputs, wasted resources, and ultimately, failed initiatives that undermine confidence in AI adoption across the organization. The ability to effectively communicate with these sophisticated systems to bridge human intent with machine understanding is the defining factor of AI success.

Chatbots and virtual assistants that handle specific inquiries from a particular audience, as deployed across many industries, are a good example of AI prompt engineering in practice.

The global prompt engineering market is projected to expand at a compound annual growth rate (CAGR) of 32.8% from 2024 to 2030.

Source: grandviewresearch.com

In our comprehensive prompt engineering guide, we discuss this evolving discipline, examining how it has transformed from a niche skill into a strategic business imperative in 2025 that directly impacts the success of AI implementations.

What is prompt engineering in AI

Prompt engineering is the practice of designing and refining inputs (prompts) for AI models to generate accurate, relevant, and consistent outputs. It involves structuring instructions with clarity, context, and sequencing so that large language models (LLMs) correctly interpret human intent and deliver reliable results.

What began as improvisational experimentation has evolved into a methodical discipline with established frameworks and techniques. Prompt optimization techniques encompass managing context windows, evaluating outputs against specific metrics, and implementing security measures to prevent vulnerabilities like prompt injection and prompt leaking.

Organizations that develop robust capabilities in this area consistently achieve higher accuracy rates, less human review, and faster deployment of AI use cases. With AI adoption picking up, prompt engineering has transformed from a technical consideration into a strategic business capability that directly impacts ROI.

Why prompt engineering matters

Prompt engineering serves as the critical interface between human intent and machine output in AI systems. As large language models become more integrated into business operations, the ability to craft precise prompts directly influences the relevance, accuracy, and coherence of AI-generated content.

For businesses, prompt engineering matters in the following ways:

- Enhanced control: Well-designed prompts establish context and guide AI behavior without requiring model parameter adjustments.

- Improved accuracy: Refined prompts reduce ambiguities, leading to more accurate AI responses.

- Efficiency gains: Teams achieve desired outputs more quickly, enhancing productivity across content creation and data analysis.

- Cross-domain versatility: Prompt engineering techniques apply effectively across marketing, healthcare, and financial services.

- In-context learning: Advanced LLM prompt engineering helps models learn specific tasks from the context supplied in the prompt, without extensive retraining.

As models continue improving, prompt engineering remains the essential bridge between human objectives and AI capabilities, making it critical in the AI development lifecycle.

How AI prompt engineering works

At its core, AI prompt engineering is about effectively communicating intent to AI systems. Several key principles form the foundation of good prompt design:

A. Clarity and specificity

Language models perform best when given clear, specific instructions. Effective prompts leave little room for interpretation by:

- Defining the exact task or output format required

- Specifying the tone, style and perspective to adopt

- Establishing clear parameters and constraints

- Providing concrete examples of desired outputs

When prompts lack specificity, AI systems must make assumptions that may not align with user intent.

Example: A vague prompt like “Write about climate change” could yield anything from scientific analysis to political commentary, while “Create a 500-word technical summary of recent advances in carbon capture technology for environmental engineers” provides the clarity needed for consistent, targeted results.
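One way to keep prompts specific is to make the required elements explicit fields, so that none can be left out. The sketch below is a minimal illustration, not a standard API: the `PromptSpec` class, its field names, and the two sample constraints are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the elements a specific prompt should state."""
    task: str
    audience: str
    length_words: int
    tone: str = "neutral"
    constraints: list[str] = field(default_factory=list)

    def render(self) -> str:
        # State task, length, and audience in one sentence so nothing is implicit.
        lines = [
            f"Create a {self.length_words}-word {self.task} for {self.audience}.",
            f"Tone: {self.tone}.",
        ]
        # Each constraint becomes its own bullet so it is easy to review later.
        lines += [f"- {c}" for c in self.constraints]
        return "\n".join(lines)


spec = PromptSpec(
    task="technical summary of recent advances in carbon capture technology",
    audience="environmental engineers",
    length_words=500,
    tone="technical",
    # Illustrative constraints only; they are not taken from the article.
    constraints=["Use section headings", "Define each acronym on first use"],
)
prompt = spec.render()
print(prompt)
```

Because every field except tone and constraints is mandatory, a vague request such as "Write about climate change" cannot be expressed without also deciding on the audience and length.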

B. Contextual framing

Context substantially influences how AI systems interpret and respond to instructions. Strategic contextual framing involves:

- Establishing relevant background information

- Defining the role or perspective the AI should adopt

- Creating appropriate “mental models” for the AI to operate within

- Maintaining consistent context across multi-turn interactions

The same prompt yields different results depending on contextual framing. You are essentially creating a framework for the AI to operate within.

Example: Asking a model to “analyze this financial data” will produce fundamentally different outputs when prefaced with “You are a forensic accountant investigating fraud” versus “You are a financial advisor helping a client plan for retirement.”

The forensic accountant context will trigger scrutiny of irregularities, unusual transactions, and potential deception patterns, while the financial advisor framing will focus on growth patterns, risk assessment, and future planning opportunities.
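The two framings above can be sketched as chat-style message lists in which only the role description changes. This assumes the common "system" and "user" role convention used by many chat interfaces. The `frame_request` helper and the sample figures are hypothetical placeholders.

```python
def frame_request(role_description: str, instruction: str, data: str) -> list[dict[str, str]]:
    """Build a chat-style message list; the role/content layout is an assumed convention."""
    return [
        # The framing goes first so it applies to everything that follows.
        {"role": "system", "content": role_description},
        {"role": "user", "content": f"{instruction}\n\n{data}"},
    ]


# Made-up placeholder figures, used only to show that the data stays identical.
data = "Q1 revenue: 1.2M; Q2 revenue: 1.9M; Q2 refunds: 0.4M"
instruction = "Analyze this financial data."

forensic = frame_request(
    "You are a forensic accountant investigating fraud.", instruction, data
)
advisor = frame_request(
    "You are a financial advisor helping a client plan for retirement.", instruction, data
)

# Same instruction and data, different framing.
print(forensic[0]["content"])
print(advisor[0]["content"])
```

Keeping the framing in its own message makes it straightforward to hold the instruction and data constant while comparing how different roles change the output.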

C. Instruction sequencing

Modern AI prompt techniques recognize that language models process a prompt as an ordered sequence, so the position of an instruction can affect how much weight it receives. Placing critical context and instructions early is a common practice. Effective sequencing includes:

- Breaking complex tasks into logical steps

- Prioritizing critical instructions for maximum attention

- Using hierarchical structures for multi-part requests

- Implementing clear separators between different components

Example: Consider the following two approaches to request a comprehensive market analysis:

Poorly sequenced: “Create a market analysis for our new product. Include customer segments, competitive landscape, pricing strategy, and distribution channels. The product is a smart home security system with AI capabilities targeting homeowners in urban areas.”

Effectively sequenced: “You are a market research analyst preparing an analysis for a new smart home security system with AI capabilities targeting urban homeowners.

- First, identify 3-4 key customer segments based on demographics and security needs.

- Next, analyze the top 5 competitors in this space, highlighting their strengths and weaknesses.

- Then, recommend a pricing strategy considering both competitor positioning and perceived value.

- Finally, propose optimal distribution channels with rationale.

Format your analysis with clear section headings and bullet points for key insights.”

The second approach in this example places critical context first, breaks down the task into logical steps, prioritizes information in order of importance, and uses clear formatting instructions—resulting in more structured, comprehensive output.
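The effectively sequenced prompt can also be assembled programmatically, which keeps the order (context, then steps, then format) fixed across requests. The following is a minimal sketch. The `build_sequenced_prompt` function and the `###` section separators are illustrative choices rather than a required format.

```python
def build_sequenced_prompt(context: str, steps: list[str], output_format: str) -> str:
    """Assemble a prompt with context first, numbered steps, then format rules."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    sections = [
        ("CONTEXT", context),
        ("STEPS", numbered),
        ("OUTPUT FORMAT", output_format),
    ]
    # A visible separator marks where each component starts.
    return "\n\n".join(f"### {title}\n{body}" for title, body in sections)


prompt = build_sequenced_prompt(
    context=(
        "You are a market research analyst preparing an analysis for a new smart "
        "home security system with AI capabilities targeting urban homeowners."
    ),
    steps=[
        "Identify 3-4 key customer segments based on demographics and security needs.",
        "Analyze the top 5 competitors in this space, highlighting their strengths and weaknesses.",
        "Recommend a pricing strategy considering both competitor positioning and perceived value.",
        "Propose optimal distribution channels with rationale.",
    ],
    output_format="Use clear section headings and bullet points for key insights.",
)
print(prompt)
```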

Advanced prompt engineering techniques for AI

Several advanced prompting techniques have emerged as particularly effective for enterprise applications. These methods enhance the accuracy, consistency, and usefulness of AI outputs – making them essential tools for organizations looking to unlock real business value from generative AI systems.

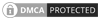

A. Chain-of-thought (CoT) prompting

This technique explicitly guides AI through a step-by-step reasoning process, dramatically improving performance on complex tasks requiring logical reasoning. Chain-of-thought prompting creates a structured pathway for the AI to follow as it processes information, making explicit what would otherwise remain implicit in the system’s reasoning.

The approach breaks complex problems into discrete reasoning steps, requests intermediate conclusions, encourages self-verification at critical decision points, and provides a framework for methodical problem solving.

For example, when tasked with analyzing mortgage approval risks, a CoT-guided model will explicitly evaluate credit scores, debt-to-income ratios, employment stability, and market conditions before reaching its final recommendation, making each step visible and auditable.

OpenAI’s o1 model, which utilizes CoT reasoning, achieved an 83% score on a qualifying exam for the International Mathematical Olympiad. This is a substantial improvement compared to the 13% score by its predecessor, GPT-4o.

Source: https://www.reuters.com

By making the reasoning process explicit, organizations gain visibility into how the AI arrived at its conclusions, a critical consideration for auditable decision making and regulatory compliance.

Prompt engineering for ChatGPT and other conversational AI has particularly benefited from this approach, as it helps manage a model’s tendency to generate conversational but potentially unfocused responses.
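The mortgage example can be sketched as a chain-of-thought template that names each reasoning step, asks for a self-check, and fixes the format of the final line so it can be parsed. The function names, the step wording, and the "approve, decline, or refer" options are assumptions made for illustration.

```python
from typing import Optional

FACTORS = ["credit score", "debt-to-income ratio", "employment stability", "market conditions"]


def build_cot_prompt(question: str, factors: list[str]) -> str:
    """Ask for one visible reasoning step per factor, then a final recommendation."""
    steps = "\n".join(
        f"Step {i}: Evaluate the {factor} and state an intermediate conclusion."
        for i, factor in enumerate(factors, start=1)
    )
    return (
        f"{question}\n\n"
        "Work through the following in order, showing your reasoning for each:\n"
        f"{steps}\n"
        "Before answering, check that the intermediate conclusions are consistent.\n"
        "End with one line of the form 'Recommendation: approve, decline, or refer'."
    )


def extract_recommendation(response: str) -> Optional[str]:
    """Return the text after the last 'Recommendation:' line, or None if absent."""
    for line in reversed(response.splitlines()):
        if line.strip().lower().startswith("recommendation:"):
            return line.split(":", 1)[1].strip()
    return None


prompt = build_cot_prompt("Assess the risk of approving the mortgage application below.", FACTORS)
print(prompt)
```

Fixing the shape of the last line keeps the intermediate reasoning available for audit while still letting downstream code read the decision.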

B. Few-shot and zero-shot learning

These approaches enable AI systems to perform new tasks with minimal or no specific examples:

- Few-shot learning: Providing a small number of examples in the prompt to establish patterns

- Zero-shot learning: Leveraging the model’s existing knowledge to perform entirely new tasks

The choice between these approaches depends on the task complexity and model capabilities. Few-shot learning provides concrete examples that establish patterns for the model to follow, while zero-shot learning relies on the model’s preexisting understanding of tasks and instructions.

The most effective prompt optimization techniques often combine these approaches, using minimal examples to clarify expectations while leveraging the model’s existing capabilities.
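The difference between the two approaches comes down to whether worked examples are included before the actual query. The sketch below uses one hypothetical `build_prompt` helper for both cases. The "Input:" and "Output:" labels and the sample tickets are illustrative, not a required format.

```python
from typing import Sequence


def build_prompt(
    instruction: str, query: str, examples: Sequence[tuple[str, str]] = ()
) -> str:
    """Zero-shot when `examples` is empty; few-shot when examples are supplied."""
    parts = [instruction]
    for text, label in examples:
        # Each example shows the pattern the model is expected to continue.
        parts.append(f"Input: {text}\nOutput: {label}")
    # The query uses the same layout, with the output left open.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


instruction = "Classify the support ticket as 'billing' or 'technical'."
query = "The app crashes when I open settings."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("I was charged twice this month.", "billing"),
        ("I cannot log in after the update.", "technical"),
    ],
)
print(few_shot)
```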

Common challenges in AI prompt engineering

Effective prompt engineering requires anticipating and addressing key obstacles that impact AI performance, from ensuring factual accuracy to maintaining consistent results as models evolve.

Addressing AI “hallucinations” and factual accuracy

One of the major challenges is ensuring factual accuracy and preventing AI “hallucinations”: confidently stated but incorrect information. Effective strategies include:

- Explicit accuracy instructions: Incorporating specific directives about uncertainty acknowledgment

- Knowledge grounding techniques: Anchoring responses to verifiable information sources

- Self-verification prompting: Instructing the AI to verify its own statements

- Multiple validation approaches: Implementing layered verification systems

Layered approaches that combine multiple techniques tend to deliver higher accuracy than relying on any single method.
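Two of these strategies, knowledge grounding and explicit uncertainty instructions, can be combined with a simple automated check on the response. The sketch below rests on several assumptions: the source IDs, the sample source text, and the bracketed citation format are all hypothetical. The check only confirms that cited IDs exist, not that the claims are true.

```python
import re


def build_grounded_prompt(question: str, sources: dict[str, str]) -> str:
    """Restrict the answer to supplied sources and allow an explicit 'I don't know'."""
    listed = "\n".join(f"[{sid}] {text}" for sid, text in sources.items())
    return (
        "Answer using only the sources below. Cite a source ID in square brackets "
        "after each claim. If the sources do not contain the answer, reply exactly: "
        "I don't know.\n\n"
        f"Sources:\n{listed}\n\nQuestion: {question}"
    )


def unknown_citations(answer: str, sources: dict[str, str]) -> set[str]:
    """Return cited IDs that were never provided, a cheap signal of fabrication."""
    cited = set(re.findall(r"\[([^\[\]]+)\]", answer))
    return cited - set(sources)


# Placeholder source text for illustration only.
sources = {
    "S1": "The policy covers water damage from burst pipes.",
    "S2": "Claims must be filed within 30 days of the incident.",
}
prompt = build_grounded_prompt("Is flood damage covered?", sources)
suspect = unknown_citations("Burst pipes are covered [S1]; floods are covered too [S3].", sources)
print(suspect)
```

A response that cites an ID outside the supplied set can be routed to human review, which is one way to layer a second validation step on top of the prompt itself.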

Managing prompt drift and model updates

As models evolve and receive updates, previously optimized prompts may experience performance degradation—a phenomenon known as “prompt drift.” Mitigation strategies include:

- Testing frameworks: Regularly validating prompt performance against benchmarks.

- Version-specific prompt libraries: Maintaining prompt variants optimized for different model versions.

- Adaptive prompting systems: Implementing frameworks that automatically adjust to model changes.

- Documentation of prompt reasoning: Capturing the intent behind prompt design decisions.

These approaches enable organizations to maintain consistent performance, even as underlying models evolve, ensuring that investments in prompt optimization techniques remain valuable over time rather than requiring constant redevelopment.
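A testing framework and a version-specific prompt library can start out very small. The sketch below scores a prompt template against a fixed benchmark and flags it when the pass rate falls below a threshold. The model names, templates, benchmark cases, and the 0.9 threshold are hypothetical, and `stub_model` stands in for a real model call so the example runs offline.

```python
from typing import Callable

# Hypothetical library: one prompt variant per model version.
PROMPT_LIBRARY = {
    "model-v1": "Classify the sentiment as positive or negative: {text}",
    "model-v2": "Reply with one word, positive or negative. Text: {text}",
}

# Fixed benchmark of (input, expected output) pairs.
BENCHMARK = [("I love this product", "positive"), ("This is terrible", "negative")]


def pass_rate(
    model: Callable[[str], str], template: str, cases: list[tuple[str, str]]
) -> float:
    """Fraction of benchmark cases where the model output matches exactly."""
    hits = sum(
        model(template.format(text=text)).strip().lower() == expected
        for text, expected in cases
    )
    return hits / len(cases)


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call, used only so the sketch runs offline."""
    return "positive" if "love" in prompt else "negative"


THRESHOLD = 0.9  # Assumed acceptance level; set per use case.
rate = pass_rate(stub_model, PROMPT_LIBRARY["model-v1"], BENCHMARK)
needs_review = rate < THRESHOLD
print(rate, needs_review)
```

Re-running the same benchmark after each model update gives an early signal of prompt drift before it reaches production.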

Real-world prompt engineering examples driving business value

Prompt engineering use cases demonstrate measurable business value across diverse industries, from content creation to healthcare, by integrating specialized techniques that address sector-specific demands.

A. Content creation and marketing

AI prompt engineering has revolutionized content workflows by enabling automated blog generation, scalable product descriptions, personalized emails, optimized social media, and knowledge base maintenance. Organizations implementing sophisticated prompts that preserve brand voice report 3-5x production capacity increases while maintaining quality standards.

B. Financial services applications

In banking and finance, generative AI prompt engineering prioritizes regulatory compliance, consistent risk assessment frameworks, balanced precision with uncertainty acknowledgment, and strict factual verification. Financial institutions deploy specialized frameworks that incorporate compliance language directly into prompt design, enabling compliant content that delivers valuable insights to stakeholders.

C. Healthcare

Medical applications require AI prompt engineering that adheres to clinical guidelines, incorporates appropriate terminology, distinguishes between diagnostic information and recommendations, and supports evidence-based reasoning. Healthcare organizations implement frameworks with medical knowledge graphs, guidelines, and explicit boundaries, enabling them to provide valuable information within ethical parameters.

D. Customer experience implementations

AI-powered customer support services use prompt engineering to maintain consistent brand voice, adapt to diverse customer segments, handle emotional content appropriately, and seamlessly escalate when necessary. Organizations develop tailored prompt libraries for different personas and scenarios, improving satisfaction while reducing support team workloads.

AI Prompt Solution Enhances Model Performance for AI Training Data Firm

A leading AI training data firm in the US aimed to improve the performance of their AI models by enhancing the quality and scalability of their training data. The client required a solution that could generate high-quality AI prompts to facilitate better model training and performance.

HitechDigital collaborated with the client to develop a scalable AI prompt solution tailored to their specific needs. A dedicated team leveraged advanced AI prompt engineering techniques to create prompts that effectively guided the AI models during training, resulting in improved accuracy and efficiency.

The final deliverables led to:

- Enhanced model performance

- Scalable prompt generation

- Improved training efficiency

Emerging prompt engineering trends

The future of prompt engineering will feature automated optimization systems using machine learning to identify effective patterns, test variations, and implement continuous improvements without human intervention. Several enterprise platforms already employ reinforcement learning to integrate high-performing prompt variations into production systems, reducing manual effort while improving performance.

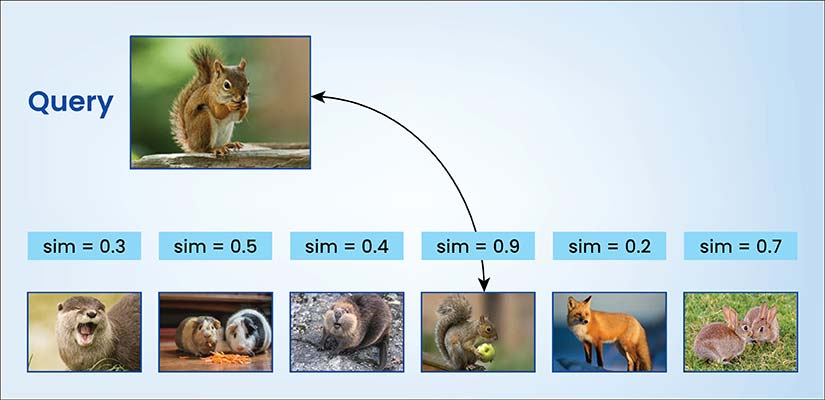

Simultaneously, multi-modal prompt engineering is expanding with the rise of AI systems processing text, images, audio and video. Leading organizations develop frameworks that enable consistent cross-modal instruction techniques, visual prompt methodologies, synchronized multi-channel prompting, and contextual bridging between information types.

Additionally, personalized adaptive prompting represents the next frontier in prompt engineering use cases. Advanced systems adapt to individual communication styles, learn from interaction patterns, maintain user-specific context, and balance personalization with privacy—enabling AI to dynamically adjust communication based on preferences, expertise levels, and interaction history for more natural human-AI collaboration.

Conclusion

In 2025, AI prompt engineering has emerged as a critical strategic capability that directly impacts the ROI of AI investments. For AI implementation leaders facing pressure to demonstrate tangible value from AI initiatives, investing in AI prompt engineering capabilities offers one of the best opportunities to improve performance.

By bridging the gap between human intent and AI understanding, effective prompt engineering transforms sophisticated AI systems from impressive technological showcases into reliable business tools that deliver measurable results.

Forward-thinking organizations actively hire prompt engineers to build competitive advantage and maximize returns on their AI investments. Systematic approaches to prompt design, testing, and optimization consistently outperform ad hoc methods, helping these organizations achieve higher accuracy, greater consistency, and more valuable business outcomes.
