- Prompt engineering boosts LLM performance, helping produce accurate, relevant, and efficient AI outputs.

- Top providers bring technical depth and domain insight, delivering scalable, goal-driven solutions.

- Outsourcing solves key challenges, from limited expertise to the need for faster, smarter AI deployment.

Table of Contents

- Understanding prompt engineering

- What is the role of prompt engineering consulting

- Core competencies of a high-quality prompt engineering service provider

- Expertise in diverse LLMs and AI models

- Technical proficiency and tool usage

- Custom prompt development capabilities

- Transparent, iterative workflow

- Multimodal and multi-LLM experience

- Domain-specific use case understanding

- Integration support and scalability

- Pricing transparency and engagement models

- Why AI and ML companies outsource prompt engineering: key challenges

- How to choose the best prompt engineering service provider

- Conclusion

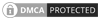

As large language models (LLMs) like ChatGPT, Claude, and Gemini become integral to business operations, the demand for prompt engineering consulting keeps accelerating. The global prompt engineering market is experiencing significant growth, projected to reach USD 7,071.8 million by 2034.

This rapid rise is driven by advances in generative AI and the need for optimized interactions with AI systems. Well-designed prompts are often what separates high-performing AI outputs from mediocre ones. From custom prompt development to LLM prompt optimization services, businesses are investing in AI prompt engineering services to unlock smarter, more reliable results.

However, as the market expands, so does the challenge of finding a skilled and strategic prompt engineering service provider. This article will help you navigate your options, whether you’re looking to hire a prompt engineering expert or to partner with the best prompt engineering agency and ensure real ROI.

Understanding prompt engineering

Prompt engineering is the meticulous process of designing and refining input prompts to guide AI models, particularly large language models (LLMs), towards generating precise and relevant outputs.

It’s more than just asking a question; it’s about strategically crafting instructions tailored to the specific nuances of models like ChatGPT. While basic prompt writing might yield rudimentary results, advanced prompt engineering services explore the complexities of AI interactions, leveraging techniques like few-shot learning, chain-of-thought prompting, and parameter tuning.
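To make two of the techniques named above concrete, here is a minimal Python sketch that assembles a few-shot prompt whose worked example spells out its reasoning step by step (a simple form of chain-of-thought prompting). The billing task, the `Example` class, and the `build_prompt` helper are hypothetical illustrations rather than any provider's actual method, and no model is called.

```python
# Minimal sketch: few-shot prompt assembly with chain-of-thought style examples.
# The task, examples, and helper names are hypothetical illustrations.
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    reasoning: str
    answer: str


def build_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Assemble a few-shot prompt whose examples show step-by-step reasoning."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Q: {ex.question}")
        parts.append(f"Reasoning: {ex.reasoning}")
        parts.append(f"A: {ex.answer}")
        parts.append("")
    # End with the new question and an open "Reasoning:" slot so the model
    # is nudged to work through the problem before giving its answer.
    parts.append(f"Q: {query}")
    parts.append("Reasoning:")
    return "\n".join(parts)


examples = [
    Example(
        question="An order of 3 items at $4 each ships for $5. What is the total?",
        reasoning="3 items x $4 = $12. $12 + $5 shipping = $17.",
        answer="$17",
    ),
]

prompt = build_prompt(
    "Answer customer billing questions. Show your reasoning, then the answer.",
    examples,
    "An order of 2 items at $10 each ships for $3. What is the total?",
)
print(prompt)
```

Because the prompt ends with an open `Reasoning:` line, the model is invited to continue with its own step-by-step working before stating an answer.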

This discipline acknowledges that each AI model has unique characteristics that require distinct approaches. The value of this practice lies in its ability to unlock the true potential of LLMs.

Effective prompt engineering can lead to substantial benefits, including cost reduction by minimizing iterations, enhanced output quality with increased accuracy and relevance, and streamlined workflows. By mastering this skill, businesses can optimize their AI investments and achieve superior results.

What is the role of prompt engineering consulting

The role of prompt engineering consulting is pivotal in bridging the gap between raw AI potential and tangible business outcomes. Consultants in this field act as strategic partners, guiding organizations through the complexities of LLM interactions.

They analyze specific business needs, translate them into optimized prompts, and ensure AI outputs align with strategic goals. By leveraging their expertise, they mitigate the risks of inefficient AI usage, reducing the costs associated with iterative trial-and-error.

They also provide crucial insights into emerging AI trends, helping businesses stay ahead of the curve. Ultimately, prompt engineering consulting maximizes ROI by unlocking the full power of AI, driving efficiency, innovation and competitive advantage.

Core competencies of a high-quality prompt engineering service provider

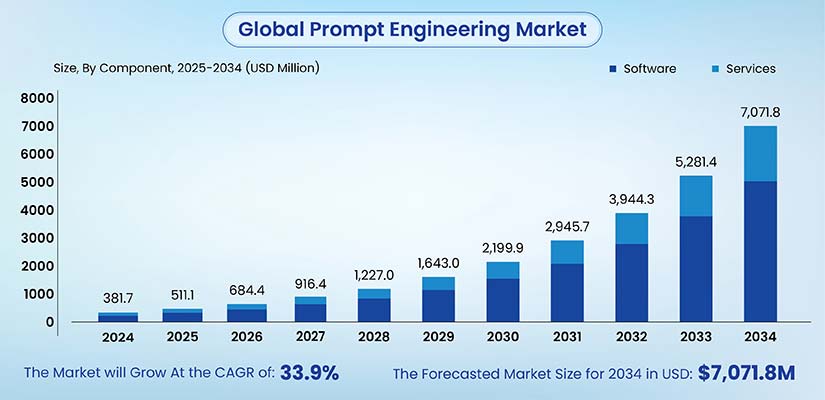

Choosing the right prompt engineering consulting partner is more than just hiring someone who can write good prompts. You need a strategic collaborator who brings technical depth, model versatility, domain insight, and a commitment to continuous improvement.

Here’s a breakdown of the core competencies to look for:

Expertise in diverse LLMs and AI models

A top-tier prompt engineering service provider should demonstrate hands-on experience with a variety of large language models. This includes not just ChatGPT but also Claude, Gemini, and openly available models such as Llama, Mistral, Falcon, or GPT-Neo. This expertise is essential because each LLM processes language differently and has its own token limits, context window size, and behaviour patterns.

Prompts that work well with GPT-4 may not perform as expected with Claude 3. A provider fluent across multiple models can adapt and tailor prompts to suit each model’s architecture, leading to better results. This expertise also helps in choosing and configuring the right model for your use case, which leads to faster responses, more accurate outputs and a smoother user experience. Skilled providers also understand how to reduce model bias and improve performance on specific tasks. Whether you’re building a customer-facing chatbot or an internal automation tool, model-specific optimization boosts both accuracy and efficiency.

A retail company using ChatGPT for customer support automation switched to Claude for its longer context window. The service provider’s ability to optimize prompts for Claude’s extended context improved query resolution.

Technical proficiency and tool usage

Beyond creative prompt writing, prompt engineering consulting requires strong technical skills. This includes proficiency in Python, API integration, prompt management and orchestration tools such as PromptLayer or LangChain, and experience with advanced techniques like fine-tuning AI models and building RAG (retrieval-augmented generation) pipelines.

Prompt engineering isn’t just about writing text. It often involves chaining prompts, integrating external APIs and building real-time, dynamic AI systems. Technically skilled providers can automate prompt workflows, integrate with existing systems, and reduce manual intervention. This leads to faster deployment, streamlined operations and better scalability of AI applications. A technically adept prompt engineering agency also ensures your models are used effectively and securely, avoiding misuse or inefficiencies.
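Prompt chaining can be sketched in a few lines of Python. In this minimal example, a hypothetical `call_model` function stands in for a real LLM API client (it is stubbed so the code runs offline), and the output of an extraction prompt becomes the input of a message-writing prompt. Orchestration frameworks offer richer versions of this pattern; the sketch only shows the core idea.

```python
# Minimal sketch of prompt chaining: each step's output feeds the next prompt.
# `call_model` is a hypothetical stub standing in for a real LLM API call.
from typing import Callable


def call_model(prompt: str) -> str:
    """Hypothetical stub: a real system would send `prompt` to an LLM API."""
    if prompt.startswith("Extract"):
        return "name=Acme Corp; plan=Pro; seats=25"
    if prompt.startswith("Write"):
        return "Welcome, Acme Corp! Your Pro plan with 25 seats is ready."
    return ""


def extract_step(text: str) -> str:
    # Step 1: pull structured fields out of free-form customer text.
    return call_model(f"Extract the company name, plan and seat count from:\n{text}")


def welcome_step(fields: str) -> str:
    # Step 2: turn the extracted fields into a customer-facing message.
    return call_model(f"Write a one-line welcome message using these fields:\n{fields}")


def run_chain(steps: list[Callable[[str], str]], initial_input: str) -> str:
    """Pass each step's output to the next step and return the final output."""
    data = initial_input
    for step in steps:
        data = step(data)
    return data


result = run_chain(
    [extract_step, welcome_step],
    "Hi, we're Acme Corp and just signed up for Pro with 25 seats.",
)
print(result)
```

Keeping each step as a separate function makes it possible to test, log, or swap out one prompt without touching the others.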

A healthcare tech company required HIPAA-compliant prompt testing. Their provider built automated test scripts, securely logged outputs, and reduced compliance errors.

Custom prompt development capabilities

The ability to create custom prompts tailored to your industry, goals and tone is a key differentiator. Generic, off-the-shelf prompts often fail to fully leverage the capabilities of large language models.

Custom prompts align with your brand voice, use case, and workflow, resulting in more relevant, human-like, and actionable outputs. Personalized prompts lead to better user engagement, fewer misunderstandings and more efficient use of tokens, lowering API costs. By analyzing specific user needs, a skilled provider can create highly accurate, context-aware prompts. This reduces the need for repeated iterations and speeds up time to value, ultimately enhancing user satisfaction and trust in your AI solution.
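The link between prompt length and API cost comes down to simple arithmetic. The sketch below assumes a rough rule of thumb of about four characters per token and a hypothetical price of $0.01 per 1,000 input tokens; real tokenizers and provider pricing differ, so the numbers are illustrative only.

```python
# Rough sketch of how prompt length drives input-token cost.
# Assumptions (hypothetical, not any provider's real tokenizer or pricing):
#   - about 4 characters per token, a common rule of thumb for English text
#   - an input price of $0.01 per 1,000 tokens
CHARS_PER_TOKEN = 4
PRICE_PER_1K_INPUT_TOKENS = 0.01


def estimate_tokens(text: str) -> int:
    """Crude token estimate; a real tokenizer would give exact counts."""
    return max(1, round(len(text) / CHARS_PER_TOKEN))


def monthly_input_cost(prompt: str, requests_per_month: int) -> float:
    """Estimated monthly spend on input tokens for one prompt template."""
    tokens = estimate_tokens(prompt) * requests_per_month
    return tokens / 1000 * PRICE_PER_1K_INPUT_TOKENS


# A deliberately padded prompt with repeated boilerplate vs. a focused one.
verbose = "You are a helpful assistant. " * 10 + "Summarize the ticket below."
concise = "Summarize the ticket below in two sentences."

for label, p in [("verbose", verbose), ("concise", concise)]:
    print(f"{label}: ~{estimate_tokens(p)} tokens, "
          f"${monthly_input_cost(p, 100_000):.2f}/month at 100k requests")
```

The per-request difference looks small, but it is multiplied by every call, which is why trimming redundant instructions matters at scale.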

An edtech platform offering AI-based tutoring improved student feedback after switching to role-specific prompts that mimicked a real teacher’s tone and instructional style.

Transparent, iterative workflow

A reliable prompt engineering company should follow a clear, collaborative process that includes ideation, prototyping, testing, feedback collection and continuous refinement.

Because LLMs are constantly evolving, prompt engineering isn’t a one-time task; it requires ongoing iteration to stay effective and aligned with changing model behaviour. An iterative workflow allows for continuous prompt refinement, clear collaboration between teams, reduced ambiguity, and better alignment with user expectations. You gain visibility into what’s being developed, how it’s tested, and how it improves, which reduces risk, improves AI performance, and builds user trust.
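One iteration cycle of such a workflow might look like the following minimal Python sketch: each prompt variant runs against a small set of test cases, a keyword check scores the outputs, and the best-scoring variant is kept for the next round. `fake_model` is a hypothetical stub in place of a real LLM call, and keyword matching is a deliberate simplification of the human review or model-graded evaluation a real project would likely use.

```python
# Minimal sketch of one prompt-iteration cycle: score variants, keep the best.
# `fake_model` is a hypothetical stub; keyword matching is a simplified metric.
from typing import Callable

TestCase = tuple[str, list[str]]  # (input text, keywords the output must contain)


def score_variant(template: str, cases: list[TestCase],
                  model: Callable[[str], str]) -> float:
    """Fraction of test cases whose output contains every expected keyword."""
    passed = 0
    for text, keywords in cases:
        output = model(template.format(input=text)).lower()
        if all(k.lower() in output for k in keywords):
            passed += 1
    return passed / len(cases)


def fake_model(prompt: str) -> str:
    # Stub behaviour: only prompts that ask about deadlines mention one.
    base = "Summary: the contract covers software licensing."
    return base + " Deadline: 30 June." if "deadline" in prompt.lower() else base


cases: list[TestCase] = [
    ("Contract A body", ["licensing", "deadline"]),
    ("Contract B body", ["licensing"]),
]
variants = {
    "v1": "Summarize this contract:\n{input}",
    "v2": "Summarize this contract and state any deadline:\n{input}",
}

scores = {name: score_variant(t, cases, fake_model) for name, t in variants.items()}
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

Keeping the test cases fixed between rounds is what makes scores comparable across iterations; new cases can be added as edge cases surface.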

A legal tech firm worked with a provider that iterated on prompts over 15 cycles. The final version delivered a 93% match with human-verified legal summaries, significantly improving content reliability and efficiency.

Multimodal and multi-LLM experience

As AI models evolve, they increasingly support multiple input types, including text, images, audio and code. In this context, it’s essential to work with a prompt engineering service provider that understands how to craft prompts across these diverse formats.

Prompts for text-only models are vastly different from those used for image generation (e.g., DALL·E) or multimodal models like Gemini. A deep understanding of these differences ensures high-quality outputs across formats. Multimodal and multi-LLM expertise enables the development of advanced, integrated AI applications that deliver more engaging and interactive user experiences. It also supports expansion into new use cases, such as visual search, catalog automation, and voice-enabled interfaces. This capability allows businesses to build more versatile and intelligent systems that cater to a broader range of user needs.

An online apparel brand used multimodal prompt engineering to generate product pairings with styled images and descriptions. The provider crafted prompts that combined visual elements with brand tone, enabling the brand to produce complete outfit suggestions at scale. This enhanced user engagement and drove more conversions.

Domain-specific use case understanding

Deep industry knowledge adds significant value to prompt engineering. A provider with experience in sectors like healthcare, finance, education, law, or marketing can craft prompts that account for technical, regulatory, and linguistic nuances.

Domain knowledge reduces the need for constant clarification, speeds up prompt creation, and ensures the AI delivers outputs that are contextually accurate and highly relevant. When AI prompts reflect industry-specific standards and language, the results are more reliable, actionable and aligned with real-world expectations. This minimizes the risk of hallucinations or misinterpretations, especially in sensitive areas, and supports better decision-making. With the right expertise, AI becomes a trusted tool rather than a liability.
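A common way to bring domain knowledge into a prompt is to supply relevant reference text and instruct the model to answer only from it. The minimal sketch below assumes a hypothetical list of regional rules and ranks them by word overlap with the question, a simple stand-in for the embedding-based retrieval typically used in RAG pipelines.

```python
# Minimal sketch of a domain-grounded prompt: retrieve relevant reference text
# and tell the model to answer only from it. The rules are hypothetical, and
# word overlap is a simplified stand-in for embedding-based retrieval.
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in `text`."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_snippets(question: str, snippets: list[str], k: int = 2) -> list[str]:
    """Rank snippets by how many words they share with the question."""
    q = words(question)
    ranked = sorted(snippets, key=lambda s: len(q & words(s)), reverse=True)
    return ranked[:k]


def grounded_prompt(question: str, snippets: list[str]) -> str:
    context = "\n".join(f"- {s}" for s in top_snippets(question, snippets))
    return (
        "Answer using only the rules below. If they do not cover the question, "
        "say so instead of guessing.\n"
        f"Rules:\n{context}\n\nQuestion: {question}"
    )


rules = [
    "Region A: transaction records must be retained for five years.",
    "Region A: suspicious transactions must be reported within 30 days.",
    "Region B: customer identity must be re-verified every two years.",
]
question = "How long must Region A retain transaction records?"
print(grounded_prompt(question, rules))
```

The explicit "say so instead of guessing" instruction gives the model a sanctioned alternative to inventing an answer when the supplied rules do not cover the question.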

A fintech company needed prompts to summarize complex compliance regulations. A domain-aware provider crafted prompts based on region-specific financial laws, thus reducing audit preparation time.

Integration support and scalability

Prompt engineering delivers real value only when it’s integrated into real-world systems. A quality provider not only crafts effective prompts but also ensures they’re embedded seamlessly into your apps, dashboards, chatbots or API-driven workflows, on an architecture built to scale.

Even the best-engineered prompt can fall short if it’s not aligned with real-time data, user context, or backend processes. Poor integration limits functionality and reduces performance. Strong integration and scalability enable the efficient deployment of AI solutions that can grow with your business. This leads to minimal disruption, increased operational efficiency and faster time to value. With real-time adaptability and a future-proof infrastructure, your AI investments stay relevant and effective over time.

A SaaS company implemented prompt chaining with Zapier and OpenAI to automate client onboarding. The setup scaled effortlessly, handling over 2,000 requests per month, with zero failures post-integration.

Pricing transparency and engagement models

Cost predictability is crucial when investing in prompt engineering services. A reliable provider should offer clear pricing structures, pilot programs, and flexible engagement models, whether hourly, fixed-rate, or subscription-based.

Unclear billing, vague deliverables, or hidden fees can quickly inflate project costs and erode ROI. Transparency from the start builds trust and ensures aligned expectations. With transparent pricing, you can budget effectively and understand exactly what you’re paying for, whether it’s custom prompt libraries, consulting time, or API optimization. Flexible engagement models also prevent vendor lock-in and support long-term collaboration. This approach fosters trust, facilitates continuous improvement, and helps you scale AI solutions with confidence.

A startup partnered with a provider offering prompt libraries on a usage-based model. As their needs expanded, they paid only for incremental improvements, ultimately reducing total engineering costs.

Why AI and ML companies outsource prompt engineering: key challenges

Before choosing a provider, it’s important to understand why many AI and ML companies are turning to external prompt engineering experts. Here are the key challenges that often lead to outsourcing.

- Lack of In-House Expertise – Many teams lack specialists who understand model-specific prompt behaviour and optimization techniques.

- Time-Intensive Prompt Iteration – Effective prompt engineering requires multiple testing cycles, which can slow down internal development timelines.

- Rapid Evolution of LLMs – Frequent updates and new model releases make it hard for in-house teams to stay current and adapt quickly.

- Scaling Across Use Cases – Managing consistent, high-quality prompts across multiple applications (such as chatbots and RAG pipelines) can overwhelm internal resources.

- Limited Access to Tools and Frameworks – Many companies don’t have access to or experience with prompt testing, evaluation, and optimization tools.

- Inconsistent AI Output Quality – Poorly structured prompts lead to hallucinations, bias or irrelevant outputs, impacting performance and trust.

- Difficulty in Aligning Prompts with Business Goals – Internal teams may struggle to translate strategic goals into effective AI instructions.

- High Cost of Trial and Error – Experimenting without expertise can lead to high API usage and increased operational costs.

- Complex Multimodal Use Cases – Teams often lack the skills to engineer prompts for image, video or code-based models.

- Need for Faster Go-to-Market – Outsourcing accelerates delivery, especially for startups and companies under pressure to launch AI-powered features quickly.

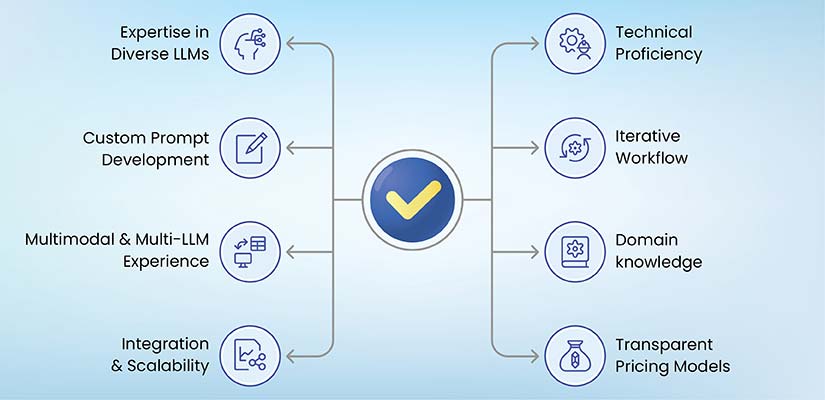

How to choose the best prompt engineering service provider


Conclusion

As generative AI continues to evolve, the role of prompt engineering becomes increasingly critical in unlocking accurate, efficient and goal-aligned outputs from LLMs. Choosing the right prompt engineering service provider is not just about technical skill; it’s about finding a partner who understands your business, adapts to emerging models, and delivers scalable, custom solutions. From core competencies to transparent workflows, the right provider can drive real ROI and future-proof your AI initiatives. By staying mindful of red flags and asking the right questions, you can confidently invest in a prompt engineering partner who will elevate your AI strategy.
